Scraping Reddit using Python

In this article, we will see how to scrape Reddit using Python. We will use PRAW (Python Reddit API Wrapper), a Python module that gives Python scripts access to the Reddit API.

Installation

To install PRAW, run the following command at the command prompt:

pip install praw

Creating a Reddit Application

Step 1: To extract data from Reddit, we need to create a Reddit application. You can create a new one at https://www.reddit.com/prefs/apps.

Reddit - Create an App

Step 2: Click on "are you a developer? create an app...".

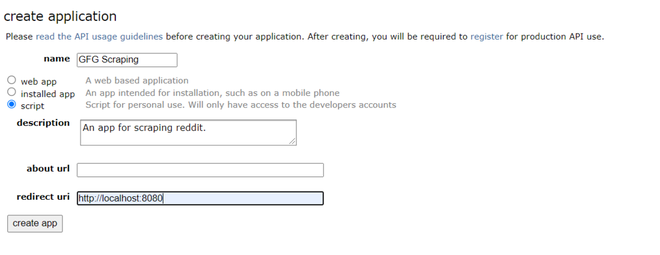

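Step 3: A form like this will appear on your screen. Enter a name and description of your choice. In the redirect uri box, enter http://localhost:8080

Application form

Step 4: After entering the details, click on "create app".

Developed application

The Reddit application has now been created, and we can use Python and PRAW to scrape data from Reddit. Note down the client_id and secret shown for the app, and decide on a user_agent value (a short string of your choice that identifies your script). These values will be used to connect to Reddit from Python.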
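Since the client_id and secret are sensitive, one option is to keep them out of the script and read them from environment variables. The sketch below is only an illustration: the environment variable names and the helper load_reddit_credentials are hypothetical, not part of PRAW.

```python
import os

# Hypothetical environment variable names; choose whatever suits your setup.
ENV_KEYS = {
    "client_id": "REDDIT_CLIENT_ID",
    "client_secret": "REDDIT_CLIENT_SECRET",
    "user_agent": "REDDIT_USER_AGENT",
}


def load_reddit_credentials(environ=os.environ):
    """Return the three values as keyword arguments for praw.Reddit()."""
    # Collect every variable that is unset or empty so the error names them all.
    missing = [name for name in ENV_KEYS.values() if not environ.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {key: environ[name] for key, name in ENV_KEYS.items()}
```

With the variables set, the result could be passed along as praw.Reddit(**load_reddit_credentials()).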

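Creating a PRAW Instance

To connect to Reddit, we need to create a PRAW instance. There are two types of PRAW instance:

- Read-only instance: With a read-only instance, we can only scrape publicly available information on Reddit, for example retrieving the top 5 posts from a particular subreddit.

- Authorized instance: With an authorized instance, you can do everything you can do with your Reddit account, such as upvoting, posting, and commenting.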

Python3

import praw

# Read-only instance
reddit_read_only = praw.Reddit(client_id="",         # your client id
                               client_secret="",     # your client secret
                               user_agent="")        # your user agent

# Authorized instance
reddit_authorized = praw.Reddit(client_id="",        # your client id
                                client_secret="",    # your client secret
                                user_agent="",       # your user agent
                                username="",         # your reddit username
                                password="")         # your reddit password

Now that we have created an instance, we can use Reddit's API to extract data. In this tutorial, we will only use the read-only instance.

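Scraping a Subreddit

There are several ways to extract data from a subreddit. Posts in a subreddit can be sorted as hot, new, top, controversial, and so on. You can use any sorting method of your choice.

Let's extract some information from the redditdev subreddit.

Python3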

import praw
import pandas as pd

reddit_read_only = praw.Reddit(client_id="",         # your client id
                               client_secret="",     # your client secret
                               user_agent="")        # your user agent

subreddit = reddit_read_only.subreddit("redditdev")

# Display the name of the Subreddit
print("Display Name:", subreddit.display_name)

# Display the title of the Subreddit
print("Title:", subreddit.title)

# Display the description of the Subreddit
print("Description:", subreddit.description)

Output:

Name, title, and description

Now let's extract the 5 hot posts from the Python subreddit:

Python3

subreddit = reddit_read_only.subreddit("Python")

for post in subreddit.hot(limit=5):
    print(post.title)
    print()

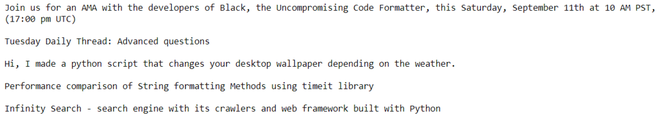

Output:

Top 5 hot posts
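The sort orders mentioned earlier (hot, new, top, controversial) correspond to methods of the same name on a PRAW subreddit object, so the choice can be made at run time. The following is a minimal sketch under that assumption; fetch_titles and SORT_METHODS are hypothetical names introduced here for illustration.

```python
# Sort orders named in the text; each is a listing method on a PRAW subreddit.
SORT_METHODS = ("hot", "new", "top", "controversial")


def fetch_titles(subreddit, sort="hot", limit=5):
    """Return the titles of the first `limit` posts for the chosen sort order."""
    if sort not in SORT_METHODS:
        raise ValueError(f"Unsupported sort order: {sort!r}")
    listing = getattr(subreddit, sort)  # e.g. subreddit.hot
    return [post.title for post in listing(limit=limit)]
```

For example, fetch_titles(reddit_read_only.subreddit("Python"), sort="new") would return the titles of the five newest posts.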

Now we will save the top posts of the Python subreddit in a pandas DataFrame:

Python3

# Scraping the top posts of the past month
posts = subreddit.top(time_filter="month")

posts_dict = {"Title": [], "Post Text": [],
              "ID": [], "Score": [],
              "Total Comments": [], "Post URL": []}

for post in posts:
    # Title of each post
    posts_dict["Title"].append(post.title)

    # Text inside a post
    posts_dict["Post Text"].append(post.selftext)

    # Unique ID of each post
    posts_dict["ID"].append(post.id)

    # The score of a post
    posts_dict["Score"].append(post.score)

    # Total number of comments inside the post
    posts_dict["Total Comments"].append(post.num_comments)

    # URL of each post
    posts_dict["Post URL"].append(post.url)

# Saving the data in a pandas dataframe
top_posts = pd.DataFrame(posts_dict)
top_posts

Output:

Top posts of the Python subreddit
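As an alternative to appending to six parallel lists, each post can be turned into one dictionary and the rows collected in a list, which pd.DataFrame also accepts. This is a sketch only; post_to_row and posts_to_rows are hypothetical helper names, and the attributes read are the same ones used in the loop above.

```python
def post_to_row(post):
    """Map one submission to a dict keyed by the column names used above."""
    return {
        "Title": post.title,
        "Post Text": post.selftext,
        "ID": post.id,
        "Score": post.score,
        "Total Comments": post.num_comments,
        "Post URL": post.url,
    }


def posts_to_rows(posts):
    """Build one row per post; the result can be passed to pd.DataFrame()."""
    return [post_to_row(post) for post in posts]
```

Keeping each row together makes it harder for the columns to drift out of step if one attribute is added or removed later.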

Exporting the data to a CSV file:

Python3

import pandas as pd

# index=True also writes the DataFrame's row index as the first column
top_posts.to_csv("Top Posts.csv", index=True)

Output:

CSV file of the top posts

Scraping Reddit Posts

To extract data from a Reddit post, we need the post's URL. Once we have the URL, we create a submission object.

Python3

import praw
import pandas as pd

reddit_read_only = praw.Reddit(client_id="",         # your client id
                               client_secret="",     # your client secret
                               user_agent="")        # your user agent

# URL of the post
url = ("https://www.reddit.com/r/IAmA/comments/m8n4vt/"
       "im_bill_gates_cochair_of_the_bill_and_melinda/")

# Creating a submission object
submission = reddit_read_only.submission(url=url)
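PRAW resolves the URL for us, but it can help to see where the post's ID sits in it: it is the path segment right after "comments" (m8n4vt in the URL above). The helper below is a hypothetical illustration of that structure using only the standard library, not a PRAW function.

```python
from urllib.parse import urlparse


def submission_id_from_url(url):
    """Return the path segment that follows 'comments' in a Reddit post URL."""
    # Split the path into its non-empty segments, e.g. r / IAmA / comments / <id>.
    parts = [part for part in urlparse(url).path.split("/") if part]
    if "comments" not in parts:
        raise ValueError("Not a Reddit post URL: " + url)
    index = parts.index("comments") + 1
    if index >= len(parts):
        raise ValueError("URL has no post ID: " + url)
    return parts[index]
```

The extracted ID could then be used with reddit_read_only.submission(id=...) instead of the full URL.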

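We will extract the top-level comments from the post we selected. We need the MoreComments class from the praw.models module. To extract the comments, we loop over the submission's comments with a for loop and add the body of each comment to the post_comments list. Inside the loop, an if statement checks whether an item is a MoreComments object. Such an object is a placeholder indicating that the post has more comments that have not been loaded; it has no body, so we skip it. Finally, we convert the list into a pandas DataFrame.

Python3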

from praw.models import MoreComments

post_comments = []

for comment in submission.comments:
    # Skip placeholders for comments that have not been loaded
    if isinstance(comment, MoreComments):
        continue
    post_comments.append(comment.body)

# creating a dataframe
comments_df = pd.DataFrame(post_comments, columns=['comment'])
comments_df

Output:

List converted to a pandas DataFrame
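The loop above only visits top-level comments and skips the MoreComments placeholders by hand. PRAW's comment forest also offers replace_more() and list(), which can be combined to collect replies as well. The sketch below assumes a submission object like the one created earlier; collect_comment_bodies is a hypothetical helper name.

```python
def collect_comment_bodies(submission, more_limit=0):
    """Return the text of every loaded comment, including replies."""
    # With limit=0 the MoreComments placeholders are removed without fetching
    # the comments they stand for; limit=None would fetch all of them instead,
    # at the cost of extra API requests.
    submission.comments.replace_more(limit=more_limit)
    # list() flattens the comment forest into a single list of comments.
    return [comment.body for comment in submission.comments.list()]
```

The returned list can be passed to pd.DataFrame(..., columns=['comment']) in the same way as post_comments.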