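Neural networks are the core of deep learning, a field with practical applications in many different domains. Today neural networks are used for image classification, speech recognition, object detection, and more. Let's try to understand the basic unit behind all of these state-of-the-art techniques.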

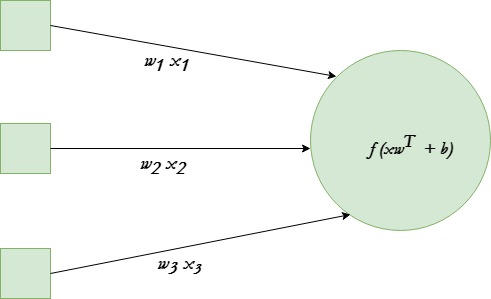

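A single neuron transforms its inputs into an output. Whether the neuron fires is determined by the inputs and the weight assigned to each input. Suppose the neuron has 3 input connections and 1 output.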
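As a rough illustration of how the inputs and weights combine, the sketch below computes the weighted sum for a neuron with 3 inputs. The input and weight values are hypothetical, chosen only for this example, and the sketch uses plain Python rather than NumPy to stay self-contained.

```python
# Hypothetical values, chosen only for illustration.
inputs = [1.0, 0.0, 1.0]     # three input connections
weights = [0.5, -0.2, 0.1]   # one weight per input

# Weighted sum of the inputs: 1.0*0.5 + 0.0*(-0.2) + 1.0*0.1
weighted_sum = sum(x * w for x, w in zip(inputs, weights))
print(weighted_sum)
```

The second input contributes nothing here because its value is 0, whatever its weight is. The activation function is then applied to this weighted sum to produce the neuron's output.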

In this example we will use the tanh activation function.
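tanh squashes any real number into the range (-1, 1), and its derivative is 1 - tanh(z)^2. The sketch below compares that formula with a finite-difference estimate; the sample point `z` and step `h` are arbitrary values picked for illustration.

```python
import math


def tanh_derivative(z):
    """Analytic derivative of tanh at z: 1 - tanh(z)^2."""
    return 1.0 - math.tanh(z) ** 2


z = 0.5    # hypothetical pre-activation value
h = 1e-6   # small step for the finite-difference estimate

# Central-difference approximation of d/dz tanh(z)
numeric = (math.tanh(z + h) - math.tanh(z - h)) / (2 * h)
analytic = tanh_derivative(z)

print(analytic, numeric)
```

The two printed values should agree to many decimal places, which is a quick way to sanity-check a hand-written derivative before using it in training.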

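The end goal is to find the set of weights for this neuron that produces correct results. We do this by training the neuron on several different training examples. At each step, we calculate the error in the neuron's output and back propagate the gradients. The step that calculates the neuron's output is called forward propagation, and the step that calculates the gradients is called back propagation.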
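To make the two steps concrete, the update can be written out for a single training example. This is a sketch under assumptions the article does not state: a squared-error loss $E$ and a step size $\eta$, both symbols introduced here for illustration. With input vector $x$, weight vector $w$, and target $t$:

$$
\begin{aligned}
z &= w^\top x, \qquad y = \tanh(z) && \text{(forward propagation)} \\
E &= \tfrac{1}{2}\,(t - y)^2 \\
\frac{\partial E}{\partial w} &= -(t - y)\,(1 - y^2)\,x && \text{(back propagation, since } \tanh'(z) = 1 - y^2\text{)} \\
w &\leftarrow w - \eta\,\frac{\partial E}{\partial w} = w + \eta\,(t - y)\,(1 - y^2)\,x
\end{aligned}
$$

An update that adds the error, multiplied by the activation gradient and by the input, directly to the weights has the same form as this rule with $\eta = 1$, summed over all training examples.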

Below is the implementation:

```python
# Python program to implement a
# single-neuron neural network

# Import all necessary libraries
from numpy import array, random, dot, tanh


# Class to create a neural
# network with a single neuron
class NeuralNetwork():

    def __init__(self):
        # Use a fixed seed so the same
        # weights are generated on every run
        random.seed(1)

        # 3x1 weight matrix with values in [-1, 1)
        self.weight_matrix = 2 * random.random((3, 1)) - 1

    # tanh as the activation function
    def tanh(self, x):
        return tanh(x)

    # Derivative of the tanh function.
    # Needed to calculate the gradients.
    def tanh_derivative(self, x):
        return 1.0 - tanh(x) ** 2

    # Forward propagation
    def forward_propagation(self, inputs):
        return self.tanh(dot(inputs, self.weight_matrix))

    # Train the neural network.
    def train(self, train_inputs, train_outputs,
              num_train_iterations):
        # Number of iterations we want to
        # perform for this set of inputs.
        for iteration in range(num_train_iterations):
            output = self.forward_propagation(train_inputs)

            # Calculate the error in the output.
            error = train_outputs - output

            # Multiply the error by the input and by the
            # gradient of the tanh function to calculate
            # the adjustment to be made to the weights.
            # Note: `output` is already tanh(z), so this call
            # evaluates 1 - tanh(output) ** 2, a positive factor
            # that differs from the exact derivative 1 - output ** 2.
            adjustment = dot(train_inputs.T, error *
                             self.tanh_derivative(output))

            # Adjust the weight matrix
            self.weight_matrix += adjustment


# Driver code
if __name__ == "__main__":

    neural_network = NeuralNetwork()

    print('Random weights at the start of training')
    print(neural_network.weight_matrix)

    train_inputs = array([[0, 0, 1], [1, 1, 1], [1, 0, 1], [0, 1, 1]])
    train_outputs = array([[0, 1, 1, 0]]).T

    neural_network.train(train_inputs, train_outputs, 10000)

    print('New weights after training')
    print(neural_network.weight_matrix)

    # Test the neural network with a new situation.
    print("Testing network on new examples ->")
    print(neural_network.forward_propagation(array([1, 0, 0])))
```

Output:

```
Random weights at the start of training
[[-0.16595599]
 [ 0.44064899]
 [-0.99977125]]
New weights after training
[[5.39428067]
 [0.19482422]
 [0.34317086]]
Testing network on new examples ->
[0.99995873]
```
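The test result can be checked by hand from the trained weights printed above. The sketch below repeats the forward propagation for the test input `[1, 0, 0]` in plain Python; the weights are copied from the printed output (rounded to 8 decimals), and the variable names are chosen here for illustration.

```python
import math

# Trained weights as printed in the output above (rounded to 8 decimals).
weights = [5.39428067, 0.19482422, 0.34317086]
test_input = [1, 0, 0]

# Forward propagation: weighted sum followed by tanh.
weighted_sum = sum(x * w for x, w in zip(test_input, weights))
prediction = math.tanh(weighted_sum)

print(weighted_sum)
print(prediction)
```

Only the first input is non-zero, so the prediction depends on the first weight alone: tanh(5.39428067) is approximately 0.99995873, which agrees with the printed test output.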