如何在Python构建 Web 抓取机器人

在本文中,我们将了解如何使用Python构建网络抓取机器人。

Web Scraping 是从网站中提取数据的过程。 Bot 是一段代码,可以自动执行我们的任务。因此,网络抓取机器人是一个程序,它会根据我们的要求自动抓取网站的数据。

需要的模块

- bs4 : Beautiful Soup(bs4) 是一个Python库,用于从 HTML 和 XML 文件中提取数据。这个模块没有内置于Python。要安装此类型,请在终端中输入以下命令。

pip install bs4

- requests : Request 允许您非常轻松地发送 HTTP/1.1 请求。这个模块也没有内置于Python。要安装此类型,请在终端中输入以下命令。

pip install requests

- Selenium : Selenium是最流行的自动化测试工具之一。它可用于自动化浏览器,如 Chrome、Firefox、Safari 等。

pip install selenium

方法一:使用Selenium

我们需要安装一个 chrome 驱动程序来自动使用selenium,我们的任务是创建一个机器人,它将持续抓取谷歌新闻网站并每 10 分钟显示所有标题。

分步实施:

第 1 步:首先我们将导入一些必需的模块。

Python3

# These are the imports to be made

import time

from selenium import webdriver

from datetime import datetimePython3

# path of the chromedriver we have just downloaded

PATH = r"D:\chromedriver"

driver = webdriver.Chrome(PATH) # to open the browser

# url of google news website

url = 'https://news.google.com/topstories?hl=en-IN&gl=IN&ceid=IN:en'

# to open the url in the browser

driver.get(url)Python3

# Xpath you just copied

news_path = '/html/body/c-wiz/div/div[2]/div[2]/\

div/main/c-wiz/div[1]/div[3]/div/div/article/h3/a'

# to get that element

link = driver.find_element_by_xpath(news_path)

# to read the text from that element

print(link.text)Python3

# I have used f-strings to format the string

c = 1

for x in range(3, 9):

print(f"Heading {c}: ")

c += 1

curr_path = f'/html/body/c-wiz/div/div[2]/div[2]/div/main\

/c-wiz/div[1]/div[{x}]/div/div/article/h3/a'

title = driver.find_element_by_xpath(curr_path)

print(title.text)Python3

import time

from selenium import webdriver

from datetime import datetime

PATH = r"D:\chromedriver"

driver = webdriver.Chrome(PATH)

url = 'https://news.google.com/topstories?hl=en-IN&gl=IN&ceid=IN:en'

driver.get(url)

while(True):

now = datetime.now()

# this is just to get the time at the time of

# web scraping

current_time = now.strftime("%H:%M:%S")

print(f'At time : {current_time} IST')

c = 1

for x in range(3, 9):

curr_path = ''

# Exception handling to handle unexpected changes

# in the structure of the website

try:

curr_path = f'/html/body/c-wiz/div/div[2]/div[2]/\

div/main/c-wiz/div[1]/div[{x}]/div/div/article/h3/a'

title = driver.find_element_by_xpath(curr_path)

except:

continue

print(f"Heading {c}: ")

c += 1

print(title.text)

# to stop the running of code for 10 mins

time.sleep(600)Python3

import requests

from bs4 import BeautifulSoup

import timePython3

url = 'https://finance.yahoo.com/cryptocurrencies/'

response = requests.get(url)

text = response.text

data = BeautifulSoup(text, 'html.parser')Python3

# since, headings are the first row of the table

headings = data.find_all('tr')[0]

headings_list = [] # list to store all headings

for x in headings:

headings_list.append(x.text)

# since, we require only the first ten columns

headings_list = headings_list[:10]

print('Headings are: ')

for column in headings_list:

print(column)Python3

# since we need only first five coins

for x in range(1, 6):

table = data.find_all('tr')[x]

c = table.find_all('td')

for x in c:

print(x.text, end=' ')

print('')Python3

import requests

from bs4 import BeautifulSoup

from datetime import datetime

import time

while(True):

now = datetime.now()

# this is just to get the time at the time of

# web scraping

current_time = now.strftime("%H:%M:%S")

print(f'At time : {current_time} IST')

response = requests.get('https://finance.yahoo.com/cryptocurrencies/')

text = response.text

html_data = BeautifulSoup(text, 'html.parser')

headings = html_data.find_all('tr')[0]

headings_list = []

for x in headings:

headings_list.append(x.text)

headings_list = headings_list[:10]

data = []

for x in range(1, 6):

row = html_data.find_all('tr')[x]

column_value = row.find_all('td')

dict = {}

for i in range(10):

dict[headings_list[i]] = column_value[i].text

data.append(dict)

for coin in data:

print(coin)

print('')

time.sleep(600)Python3

import requests

from bs4 import BeautifulSoup

from datetime import datetime

import time

# keep_alive function, that maintains continious

# running of the code.

from keep_alive import keep_alive

import pytz

# to start the thread

keep_alive()

while(True):

tz_NY = pytz.timezone('Asia/Kolkata')

datetime_NY = datetime.now(tz_NY)

# this is just to get the time at the time of web scraping

current_time = datetime_NY.strftime("%H:%M:%S - (%d/%m)")

print(f'At time : {current_time} IST')

response = requests.get('https://finance.yahoo.com/cryptocurrencies/')

text = response.text

html_data = BeautifulSoup(text, 'html.parser')

headings = html_data.find_all('tr')[0]

headings_list = []

for x in headings:

headings_list.append(x.text)

headings_list = headings_list[:10]

data = []

for x in range(1, 6):

row = html_data.find_all('tr')[x]

column_value = row.find_all('td')

dict = {}

for i in range(10):

dict[headings_list[i]] = column_value[i].text

data.append(dict)

for coin in data:

print(coin)

time.sleep(60)Python3

from flask import Flask

from threading import Thread

app = Flask('')

@app.route('/')

def home():

return "Hello. the bot is alive!"

def run():

app.run(host='0.0.0.0',port=8080)

def keep_alive():

t = Thread(target=run)

t.start()第 2 步:下一步是打开所需的网站。

蟒蛇3

# path of the chromedriver we have just downloaded

PATH = r"D:\chromedriver"

driver = webdriver.Chrome(PATH) # to open the browser

# url of google news website

url = 'https://news.google.com/topstories?hl=en-IN&gl=IN&ceid=IN:en'

# to open the url in the browser

driver.get(url)

输出:

第三步:从网页中提取新闻标题,为了提取页面的特定部分,我们需要它的XPath,可以通过右键单击所需元素并在下拉栏中选择Inspect来访问它。

单击“检查”后会出现一个窗口。从那里,我们必须复制完整的 XPath 元素才能访问它:

注意:您可能无法通过检查(取决于网站的结构)始终获得所需的确切元素,因此您可能需要浏览 HTML 代码一段时间才能获得所需的确切元素。现在,只需复制该路径并将其粘贴到您的代码中即可。运行所有这些代码行后,您将获得打印在终端上的第一个标题的标题。

蟒蛇3

# Xpath you just copied

news_path = '/html/body/c-wiz/div/div[2]/div[2]/\

div/main/c-wiz/div[1]/div[3]/div/div/article/h3/a'

# to get that element

link = driver.find_element_by_xpath(news_path)

# to read the text from that element

print(link.text)

输出:

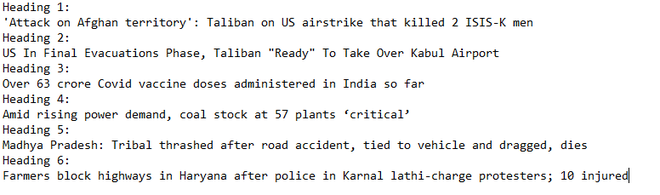

‘Attack on Afghan territory’: Taliban on US airstrike that killed 2 ISIS-K men

第 4 步:现在,目标是获取所有当前标题的 X_Paths。

一种方法是我们可以复制所有标题的所有XPath(每次google新闻中大约有6个标题),我们可以获取所有这些,但是如果要处理的内容很多,这种方法不适合报废。因此,优雅的方法是找到标题的 XPath 模式,这将使我们的任务更容易和高效。下面是网站上所有标题的XPaths,让我们弄清楚其中的模式。

/html/body/c-wiz/div/div[2]/div[2]/div/main/c-wiz/div[1]/div[3]/div/div/article/h3/a

/html/body/c-wiz/div/div[2]/div[2]/div/main/c-wiz/div[1]/div[4]/div/div/article/h3/a

/html/body/c-wiz/div/div[2]/div[2]/div/main/c-wiz/div[1]/div[5]/div/div/article/h3/a

/html/body/c-wiz/div/div[2]/div[2]/div/main/c-wiz/div[1]/div[6]/div/div/article/h3/a

/html/body/c-wiz/div/div[2]/div[2]/div/main/c-wiz/div[1]/div[7]/div/div/article/h3/a

/html/body/c-wiz/div/div[2]/div[2]/div/main/c-wiz/div[1]/div[8]/div/div/article/h3/a

因此,通过查看这些 XPath,我们可以看到只有第 5 个 div 发生了变化(粗体)。所以基于此,我们可以生成所有标题的 XPath。我们将通过使用 XPath 访问页面中的所有标题来获取它们。所以为了提取所有这些,我们有这样的代码

蟒蛇3

# I have used f-strings to format the string

c = 1

for x in range(3, 9):

print(f"Heading {c}: ")

c += 1

curr_path = f'/html/body/c-wiz/div/div[2]/div[2]/div/main\

/c-wiz/div[1]/div[{x}]/div/div/article/h3/a'

title = driver.find_element_by_xpath(curr_path)

print(title.text)

输出:

现在,代码几乎完成了,我们要做的最后一件事是代码应该每 10 分钟成为头条新闻。所以我们将运行一个 while 循环并在获得所有头条新闻后睡眠 10 分钟。

下面是完整的实现

蟒蛇3

import time

from selenium import webdriver

from datetime import datetime

PATH = r"D:\chromedriver"

driver = webdriver.Chrome(PATH)

url = 'https://news.google.com/topstories?hl=en-IN&gl=IN&ceid=IN:en'

driver.get(url)

while(True):

now = datetime.now()

# this is just to get the time at the time of

# web scraping

current_time = now.strftime("%H:%M:%S")

print(f'At time : {current_time} IST')

c = 1

for x in range(3, 9):

curr_path = ''

# Exception handling to handle unexpected changes

# in the structure of the website

try:

curr_path = f'/html/body/c-wiz/div/div[2]/div[2]/\

div/main/c-wiz/div[1]/div[{x}]/div/div/article/h3/a'

title = driver.find_element_by_xpath(curr_path)

except:

continue

print(f"Heading {c}: ")

c += 1

print(title.text)

# to stop the running of code for 10 mins

time.sleep(600)

输出:

方法二:使用Requests和BeautifulSoup

requests 模块从网站获取原始 HTML 数据,并使用漂亮的汤来清楚地解析该信息以获得我们需要的确切数据。与Selenium不同,它不涉及浏览器安装,而且更轻,因为它无需浏览器的帮助即可直接访问网络。

分步实施:

第一步:导入模块。

蟒蛇3

import requests

from bs4 import BeautifulSoup

import time

第二步:接下来要做的是获取URL数据,然后解析HTML代码

蟒蛇3

url = 'https://finance.yahoo.com/cryptocurrencies/'

response = requests.get(url)

text = response.text

data = BeautifulSoup(text, 'html.parser')

第 3 步:首先,我们将从表中获取所有标题。

蟒蛇3

# since, headings are the first row of the table

headings = data.find_all('tr')[0]

headings_list = [] # list to store all headings

for x in headings:

headings_list.append(x.text)

# since, we require only the first ten columns

headings_list = headings_list[:10]

print('Headings are: ')

for column in headings_list:

print(column)

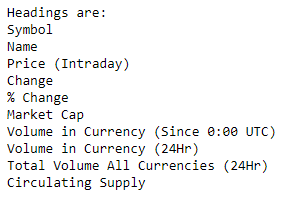

输出:

Step 4:同理,可以得到每一行的所有值

蟒蛇3

# since we need only first five coins

for x in range(1, 6):

table = data.find_all('tr')[x]

c = table.find_all('td')

for x in c:

print(x.text, end=' ')

print('')

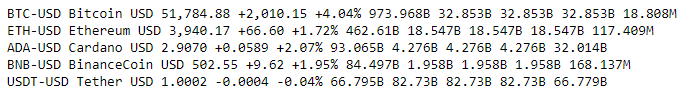

输出:

下面是完整的实现:

蟒蛇3

import requests

from bs4 import BeautifulSoup

from datetime import datetime

import time

while(True):

now = datetime.now()

# this is just to get the time at the time of

# web scraping

current_time = now.strftime("%H:%M:%S")

print(f'At time : {current_time} IST')

response = requests.get('https://finance.yahoo.com/cryptocurrencies/')

text = response.text

html_data = BeautifulSoup(text, 'html.parser')

headings = html_data.find_all('tr')[0]

headings_list = []

for x in headings:

headings_list.append(x.text)

headings_list = headings_list[:10]

data = []

for x in range(1, 6):

row = html_data.find_all('tr')[x]

column_value = row.find_all('td')

dict = {}

for i in range(10):

dict[headings_list[i]] = column_value[i].text

data.append(dict)

for coin in data:

print(coin)

print('')

time.sleep(600)

输出:

托管机器人

这是一种特定的方法,用于在不需要任何人工干预的情况下连续在线运行机器人。 replit.com 是一个在线编译器,我们将在其中运行代码。我们将在Python中的flask模块的帮助下创建一个迷你网络服务器,该模块有助于代码的连续运行。请在该网站上创建一个帐户并创建一个新的repl。

创建 repl 后,创建两个文件,一个用于运行机器人代码,另一个用于使用 Flask 创建 Web 服务器。

cryptotracker.py 的代码:

蟒蛇3

import requests

from bs4 import BeautifulSoup

from datetime import datetime

import time

# keep_alive function, that maintains continious

# running of the code.

from keep_alive import keep_alive

import pytz

# to start the thread

keep_alive()

while(True):

tz_NY = pytz.timezone('Asia/Kolkata')

datetime_NY = datetime.now(tz_NY)

# this is just to get the time at the time of web scraping

current_time = datetime_NY.strftime("%H:%M:%S - (%d/%m)")

print(f'At time : {current_time} IST')

response = requests.get('https://finance.yahoo.com/cryptocurrencies/')

text = response.text

html_data = BeautifulSoup(text, 'html.parser')

headings = html_data.find_all('tr')[0]

headings_list = []

for x in headings:

headings_list.append(x.text)

headings_list = headings_list[:10]

data = []

for x in range(1, 6):

row = html_data.find_all('tr')[x]

column_value = row.find_all('td')

dict = {}

for i in range(10):

dict[headings_list[i]] = column_value[i].text

data.append(dict)

for coin in data:

print(coin)

time.sleep(60)

keep_alive.py(网络服务器)的代码:

蟒蛇3

from flask import Flask

from threading import Thread

app = Flask('')

@app.route('/')

def home():

return "Hello. the bot is alive!"

def run():

app.run(host='0.0.0.0',port=8080)

def keep_alive():

t = Thread(target=run)

t.start()

Keep-alive 是网络中的一种方法,用于防止某个链接断开。这里保持活动代码的目的是使用flask创建一个Web服务器,这将使代码的线程(加密跟踪器代码)保持活动状态,以便它可以不断地提供更新。

现在,我们创建了一个 web 服务器,现在,我们需要一些东西来持续 ping 它,这样服务器就不会停机并且代码继续运行。有一个网站 uptimerobot.com 可以完成这项工作。在其中创建一个帐户

在 Replit 中运行加密跟踪器代码。因此,我们成功创建了一个网络抓取机器人,它将每 10 分钟连续抓取特定网站并将数据打印到终端。