Feature Matching Using Brute Force in OpenCV

In this article, we will perform feature matching with Brute Force in Python using the OpenCV library.

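Prerequisite: OpenCV

OpenCV is a library, usable from Python, for solving computer vision problems.

OpenCV is an open-source computer vision library. Computer vision is a way of teaching intelligence to machines and making them see things the way humans do.

In other words, OpenCV allows a computer to see and process visual data much as humans do.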

Installation:

To install the OpenCV library, run the following command in the command prompt.

pip install opencv-python

Approach:

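- Import the OpenCV library.

- Load the images with the imread() function, passing the path or name of each image as the argument.

- Create the ORB detector used to detect the features of the images.

- Use the ORB detector to find the key points and descriptors of both images.

- After detecting the features, create the Brute Force Matcher used to match them and store it in a variable named "brute_force".

- To match, call brute_force.match() and pass the descriptors of the first image and the descriptors of the second image as arguments.

- After finding the matches, sort them by Hamming distance. The smaller the Hamming distance, the better the match.

- After sorting by Hamming distance, draw the feature matches with the drawMatches() function, passing the first image and its key points, the second image and its key points, and best_matches as arguments. Store the result in a variable named "output_image".

- To view the drawn matches, call the imshow() function from the cv2 library, passing the window name and output_image.

- Finally, call waitKey() and then destroyAllWindows() to destroy all windows.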

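Oriented FAST and Rotated BRIEF (ORB) Detector

ORB stands for Oriented FAST and Rotated BRIEF. It is a free algorithm, and its advantage is that it does not need a GPU and can be computed on an ordinary CPU.

ORB is basically a combination of two algorithms, FAST and BRIEF, where FAST stands for Features from Accelerated Segment Test and BRIEF stands for Binary Robust Independent Elementary Features.

The ORB detector first uses the FAST algorithm to find key points, then applies the Harris corner measure to keep the top N key points among them. FAST selects key points quickly by comparing the brightness of a pixel with that of the pixels around it.

This algorithm works on key point matching. A key point is a distinctive region in an image, such as a change in intensity.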
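To make the FAST step more concrete, here is a minimal pure-Python sketch of the segment test that gives FAST its name: a pixel is treated as a corner candidate when enough contiguous pixels on a 16-pixel circle around it are all clearly brighter or all clearly darker than the center. The function names, the threshold of 20, and the run length of 9 are assumptions chosen for illustration. This is not OpenCV's implementation, and it leaves out ORB's Harris ranking and orientation steps.

```python
# Offsets (dx, dy) of the 16 pixels on a radius-3 circle, clockwise from the top.
CIRCLE = [
    (0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
    (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3),
]


def longest_circular_run(flags):
    """Length of the longest run of True values, treating the list as a ring."""
    if all(flags):
        return len(flags)
    best = run = 0
    # Walking the list twice lets a run wrap around from the end to the start.
    for flag in flags + flags:
        run = run + 1 if flag else 0
        best = max(best, run)
    return best


def is_fast_corner(image, x, y, threshold=20, n=9):
    """Simplified segment test on a grayscale image stored as image[row][col]."""
    center = image[y][x]
    ring = [image[y + dy][x + dx] for dx, dy in CIRCLE]
    brighter = [value > center + threshold for value in ring]
    darker = [value < center - threshold for value in ring]
    return max(longest_circular_run(brighter), longest_circular_run(darker)) >= n


# Synthetic 7x7 images: the corner of a bright square, and a straight vertical edge.
corner = [[200 if (col >= 3 and row >= 3) else 50 for col in range(7)] for row in range(7)]
edge = [[200 if col >= 3 else 50 for col in range(7)] for row in range(7)]

print(is_fast_corner(corner, 3, 3))  # True
print(is_fast_corner(edge, 3, 3))    # False
```

The straight edge is rejected because only 7 contiguous circle pixels differ from the center, while at the corner of the square 11 of them do.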

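This is where the BRIEF algorithm comes in: it converts the key points into binary descriptors (binary feature vectors) that contain only combinations of 0s and 1s.

The key points found by FAST and the descriptors created by BRIEF together represent the object. BRIEF is a fast method for computing feature descriptors, and it also provides a high recognition rate unless there is a large in-plane rotation.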
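Binary descriptors like these are compared by Hamming distance, that is, the number of bit positions in which two descriptors differ. The following minimal sketch shows the idea in pure Python. The helper name hamming_distance and the sample descriptors are made up for illustration, and OpenCV computes this distance internally when a matcher is created with cv2.NORM_HAMMING.

```python
def hamming_distance(desc_a: bytes, desc_b: bytes) -> int:
    """Count the bits that differ between two equal-length binary descriptors."""
    if len(desc_a) != len(desc_b):
        raise ValueError("descriptors must have the same length")
    # XOR leaves a 1 wherever the two bytes disagree; count those 1 bits.
    return sum(bin(a ^ b).count("1") for a, b in zip(desc_a, desc_b))


# Two hypothetical 32-byte descriptors that differ only in their first byte.
first = bytes([0b10110000] + [0] * 31)
second = bytes([0b10011000] + [0] * 31)

print(hamming_distance(first, second))  # 2
print(hamming_distance(first, first))   # 0
```

A distance of 0 means the descriptors are identical, so a smaller distance indicates a closer match.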

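Brute Force Matcher

The Brute Force Matcher is used to match the features of the first image against those of another image.

It takes one descriptor of the first image and compares it with all the descriptors of the second image, then moves on to the second descriptor of the first image and compares it with all the descriptors of the second image, and so on.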
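The exhaustive comparison described above can be sketched in a few lines of pure Python. This is a simplified illustration rather than OpenCV's implementation: the function names are hypothetical, descriptors are plain bytes objects, and the cross_check option only approximates what crossCheck=True does in cv2.BFMatcher by keeping pairs that are each other's nearest neighbour.

```python
def hamming(a: bytes, b: bytes) -> int:
    """Number of differing bits between two equal-length byte strings."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


def nearest_neighbours(src, dst):
    """For every descriptor in src, return (index, distance) of its closest one in dst."""
    result = []
    for descriptor in src:
        distances = [hamming(descriptor, other) for other in dst]
        best = min(range(len(dst)), key=distances.__getitem__)
        result.append((best, distances[best]))
    return result


def brute_force_match(des1, des2, cross_check=True):
    """Return (index_in_des1, index_in_des2, distance) tuples."""
    if not des1 or not des2:
        return []
    forward = nearest_neighbours(des1, des2)
    if not cross_check:
        return [(i, j, dist) for i, (j, dist) in enumerate(forward)]
    backward = nearest_neighbours(des2, des1)
    # Keep a pair only when each descriptor is the other's best match.
    return [(i, j, dist) for i, (j, dist) in enumerate(forward) if backward[j][0] == i]


# Tiny one-byte descriptors, chosen only to keep the example readable.
des1 = [b"\x00", b"\xff", b"\x0f"]
des2 = [b"\xfe", b"\x01"]

print(brute_force_match(des1, des2, cross_check=False))  # [(0, 1, 1), (1, 0, 1), (2, 1, 3)]
print(brute_force_match(des1, des2))                     # [(0, 1, 1), (1, 0, 1)]
```

With cross-checking, the third descriptor of des1 is dropped because its best match in des2 prefers a different descriptor, which is why a cross-checked matcher usually returns fewer matches than there are descriptors.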

Example 1: Reading/importing images from their paths using the OpenCV library.

Python

# import the OpenCV library
import cv2


# read both images from their paths
def read_image(path1, path2):
    # cv2.imread() loads each image as a BGR array
    read_img1 = cv2.imread(path1)
    read_img2 = cv2.imread(path2)
    return (read_img1, read_img2)


# convert both images from BGR to grayscale
def convert_to_grayscale(pic1, pic2):
    gray_img1 = cv2.cvtColor(pic1, cv2.COLOR_BGR2GRAY)
    gray_img2 = cv2.cvtColor(pic2, cv2.COLOR_BGR2GRAY)
    return (gray_img1, gray_img2)


# main function
if __name__ == '__main__':
    # paths of the two images
    first_image_path = 'C:/Users/Python(ds)/1611755129039.jpg'
    second_image_path = 'C:/Users/Python(ds)/1611755720390.jpg'

    # read the images from their paths
    img1, img2 = read_image(first_image_path, second_image_path)

    # convert the images that were read to grayscale
    gray_pic1, gray_pic2 = convert_to_grayscale(img1, img2)

    cv2.imshow('Gray scaled image 1', gray_pic1)
    cv2.imshow('Gray scaled image 2', gray_pic2)

    cv2.waitKey()
    cv2.destroyAllWindows()

Output:

Example 2: Creating the ORB detector to find the features in the images.

Python

# import the OpenCV library
import cv2


# read both images from their paths
def read_image(path1, path2):
    read_img1 = cv2.imread(path1)
    read_img2 = cv2.imread(path2)
    return (read_img1, read_img2)


# convert both images from BGR to grayscale
def convert_to_grayscale(pic1, pic2):
    gray_img1 = cv2.cvtColor(pic1, cv2.COLOR_BGR2GRAY)
    gray_img2 = cv2.cvtColor(pic2, cv2.COLOR_BGR2GRAY)
    return (gray_img1, gray_img2)


# detect features by finding the key points and descriptors of each image
def detector(image1, image2):
    # create the ORB detector
    detect = cv2.ORB_create()

    # find key points and descriptors of both images with detectAndCompute()
    key_point1, descrip1 = detect.detectAndCompute(image1, None)
    key_point2, descrip2 = detect.detectAndCompute(image2, None)
    return (key_point1, descrip1, key_point2, descrip2)


# main function
if __name__ == '__main__':
    # paths of the two images
    first_image_path = 'C:/Users/Python(ds)/1611755129039.jpg'
    second_image_path = 'C:/Users/Python(ds)/1611755720390.jpg'

    # read the images from their paths
    img1, img2 = read_image(first_image_path, second_image_path)

    # convert the images that were read to grayscale
    gray_pic1, gray_pic2 = convert_to_grayscale(img1, img2)

    # store the key points and descriptors found in both images
    key_pt1, descrip1, key_pt2, descrip2 = detector(gray_pic1, gray_pic2)

    # show the images with the key points found by the detector
    cv2.imshow("Key points of Image 1", cv2.drawKeypoints(gray_pic1, key_pt1, None))
    cv2.imshow("Key points of Image 2", cv2.drawKeypoints(gray_pic2, key_pt2, None))

    # print the descriptors of both images
    print(f'Descriptors of Image 1 {descrip1}')
    print(f'Descriptors of Image 2 {descrip2}')
    print('------------------------------')

    # print the shape of the descriptors
    print(f'Shape of descriptor of first image {descrip1.shape}')
    print(f'Shape of descriptor of second image {descrip2.shape}')

    cv2.waitKey()
    cv2.destroyAllWindows()

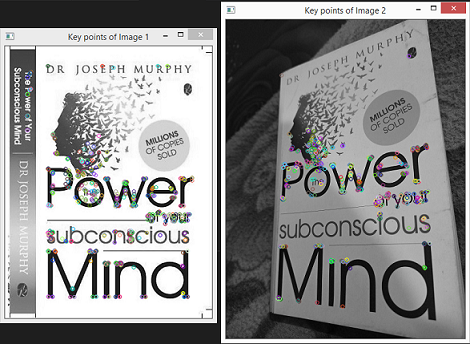

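Output:

The first output image shows the key points drawn on both images.

Key points are points of interest. Put simply, when a human looks at an image, they notice certain features in it. Similarly, when a machine reads an image, it sees some points of interest, which are known as key points.

The second output image shows the descriptors and their shapes.

These descriptors are basically arrays, or bins, of numbers. They are used to describe the features, and with these descriptors we can match two different images.

In the second output image, we can see that the descriptor shapes of the first and second images are (467, 32) and (500, 32) respectively. By default, the Oriented FAST and Rotated BRIEF (ORB) detector tries to find up to 500 features in an image, and each descriptor consists of 32 values.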
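Each of the 32 values in an ORB descriptor row is one byte, so a row carries 32 × 8 = 256 binary test results. As a small sketch of that arithmetic, the snippet below expands one made-up descriptor row into its individual bits. The helper name and the sample row are assumptions for illustration and are not taken from the output above.

```python
def descriptor_to_bits(row):
    """Expand a sequence of byte values (0-255) into a flat list of bits."""
    # Read each byte from its most significant bit down to its least significant bit.
    return [(byte >> shift) & 1 for byte in row for shift in range(7, -1, -1)]


# A hypothetical descriptor row: 32 byte values, only the first one non-zero.
sample_row = [0b10100000] + [0] * 31

bits = descriptor_to_bits(sample_row)
print(len(bits))  # 256
print(bits[:8])   # [1, 0, 1, 0, 0, 0, 0, 0]
```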

So how do we use these descriptors? We can use the Brute Force Matcher (as discussed above) to match them against each other and find out how many similarities we get.

Example 3: Feature matching using the Brute Force Matcher.

Python

# import the OpenCV library
import cv2


# read both images from their paths
def read_image(path1, path2):
    read_img1 = cv2.imread(path1)
    read_img2 = cv2.imread(path2)
    return (read_img1, read_img2)


# convert both images from BGR to grayscale
def convert_to_grayscale(pic1, pic2):
    gray_img1 = cv2.cvtColor(pic1, cv2.COLOR_BGR2GRAY)
    gray_img2 = cv2.cvtColor(pic2, cv2.COLOR_BGR2GRAY)
    return (gray_img1, gray_img2)


# detect features by finding the key points and descriptors of each image
def detector(image1, image2):
    # create the ORB detector
    detect = cv2.ORB_create()

    # find key points and descriptors of both images with detectAndCompute()
    key_point1, descrip1 = detect.detectAndCompute(image1, None)
    key_point2, descrip2 = detect.detectAndCompute(image2, None)
    return (key_point1, descrip1, key_point2, descrip2)


# match the descriptors with the brute force matcher and
# order the matches by their Hamming distance
def BF_FeatureMatcher(des1, des2):
    brute_force = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)
    no_of_matches = brute_force.match(des1, des2)

    # sort the matches by Hamming distance, smallest first
    no_of_matches = sorted(no_of_matches, key=lambda x: x.distance)
    return no_of_matches


# display the output image with the feature matches
def display_output(pic1, kpt1, pic2, kpt2, best_match):
    # draw the feature matches with drawMatches()
    output_image = cv2.drawMatches(pic1, kpt1, pic2, kpt2, best_match, None, flags=2)
    cv2.imshow('Output image', output_image)


# main function
if __name__ == '__main__':
    # paths of the two images
    first_image_path = 'C:/Users/Python(ds)/1611755129039.jpg'
    second_image_path = 'C:/Users/Python(ds)/1611755720390.jpg'

    # read the images from their paths
    img1, img2 = read_image(first_image_path, second_image_path)

    # convert the images that were read to grayscale
    gray_pic1, gray_pic2 = convert_to_grayscale(img1, img2)

    # store the key points and descriptors found in both images
    key_pt1, descrip1, key_pt2, descrip2 = detector(gray_pic1, gray_pic2)

    # matches from the brute force matcher, sorted best first
    number_of_matches = BF_FeatureMatcher(descrip1, descrip2)
    tot_feature_matches = len(number_of_matches)

    # print the total number of feature matches found
    print(f'Total Number of Features matches found are {tot_feature_matches}')

    # draw the feature matches and display the output image
    display_output(gray_pic1, key_pt1, gray_pic2, key_pt2, number_of_matches)

    cv2.waitKey()
    cv2.destroyAllWindows()

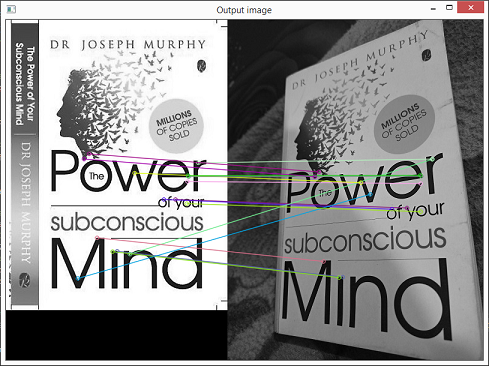

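Output:

We get a total of 178 feature matches. All 178 matches are sorted in ascending order of Hamming distance, which means the distance of the 178th match is greater than that of the first, so the first feature match is more accurate than the 178th.

The output looks messy because all 178 feature matches are drawn. Let's draw only the top fifteen matches (for the sake of visibility).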
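Selecting the best matches comes down to sorting by distance and slicing the front of the list. The sketch below shows this with a small stand-in record in place of OpenCV's match objects. The Match type, the best_matches helper, and the optional max_distance cut-off are hypothetical names introduced here for illustration. Real cv2.DMatch objects expose the same distance attribute, so the sorting logic carries over.

```python
from collections import namedtuple

# Stand-in for cv2.DMatch, holding only the fields used in this article.
Match = namedtuple("Match", ["queryIdx", "trainIdx", "distance"])


def best_matches(matches, k=15, max_distance=None):
    """Return up to k matches with the smallest distance, best first."""
    ordered = sorted(matches, key=lambda m: m.distance)
    if max_distance is not None:
        # Optionally discard matches whose distance exceeds the cut-off.
        ordered = [m for m in ordered if m.distance <= max_distance]
    return ordered[:k]


matches = [Match(0, 3, 40.0), Match(1, 0, 12.0), Match(2, 5, 27.0)]

print([m.distance for m in best_matches(matches, k=2)])            # [12.0, 27.0]
print([m.distance for m in best_matches(matches, max_distance=30)])  # [12.0, 27.0]
```

Slicing a list shorter than k simply returns every element, so the same call works when fewer than fifteen matches are found.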

Example 4: Drawing the first fifteen feature matches found with the Brute Force Matcher.

Python

# import the OpenCV library
import cv2


# read both images from their paths
def read_image(path1, path2):
    read_img1 = cv2.imread(path1)
    read_img2 = cv2.imread(path2)
    return (read_img1, read_img2)


# convert both images from BGR to grayscale
def convert_to_grayscale(pic1, pic2):
    gray_img1 = cv2.cvtColor(pic1, cv2.COLOR_BGR2GRAY)
    gray_img2 = cv2.cvtColor(pic2, cv2.COLOR_BGR2GRAY)
    return (gray_img1, gray_img2)


# detect features by finding the key points and descriptors of each image
def detector(image1, image2):
    # create the ORB detector
    detect = cv2.ORB_create()

    # find key points and descriptors of both images with detectAndCompute()
    key_point1, descrip1 = detect.detectAndCompute(image1, None)
    key_point2, descrip2 = detect.detectAndCompute(image2, None)
    return (key_point1, descrip1, key_point2, descrip2)


# match the descriptors with the brute force matcher and
# order the matches by their Hamming distance
def BF_FeatureMatcher(des1, des2):
    brute_force = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)
    no_of_matches = brute_force.match(des1, des2)

    # sort the matches by Hamming distance, smallest first
    no_of_matches = sorted(no_of_matches, key=lambda x: x.distance)
    return no_of_matches


# display the output image with the feature matches
def display_output(pic1, kpt1, pic2, kpt2, best_match):
    # draw the first fifteen best feature matches with drawMatches()
    output_image = cv2.drawMatches(pic1, kpt1, pic2, kpt2,
                                   best_match[:15], None, flags=2)
    cv2.imshow('Output image', output_image)


# main function
if __name__ == '__main__':
    # paths of the two images
    first_image_path = 'C:/Users/Python(ds)/1611755129039.jpg'
    second_image_path = 'C:/Users/Python(ds)/1611755720390.jpg'

    # read the images from their paths
    img1, img2 = read_image(first_image_path, second_image_path)

    # convert the images that were read to grayscale
    gray_pic1, gray_pic2 = convert_to_grayscale(img1, img2)

    # store the key points and descriptors found in both images
    key_pt1, descrip1, key_pt2, descrip2 = detector(gray_pic1, gray_pic2)

    # matches from the brute force matcher, sorted best first
    number_of_matches = BF_FeatureMatcher(descrip1, descrip2)

    # draw the feature matches and display the output image
    display_output(gray_pic1, key_pt1, gray_pic2, key_pt2, number_of_matches)

    cv2.waitKey()
    cv2.destroyAllWindows()

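Output:

The output image shows the first fifteen best feature matches found with the Brute Force Matcher.

From the output above, we can see that these matches are more accurate than all the remaining feature matches.

Let's take another example of feature matching.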

Example 5: Feature matching using Brute Force.

Python

# import the OpenCV library
import cv2


# read both images from their paths
def read_image(path1, path2):
    read_img1 = cv2.imread(path1)
    read_img2 = cv2.imread(path2)
    return (read_img1, read_img2)


# convert both images from BGR to grayscale
def convert_to_grayscale(pic1, pic2):
    gray_img1 = cv2.cvtColor(pic1, cv2.COLOR_BGR2GRAY)
    gray_img2 = cv2.cvtColor(pic2, cv2.COLOR_BGR2GRAY)
    return (gray_img1, gray_img2)


# detect features by finding the key points and descriptors of each image
def detector(image1, image2):
    # create the ORB detector
    detect = cv2.ORB_create()

    # find key points and descriptors of both images with detectAndCompute()
    key_point1, descrip1 = detect.detectAndCompute(image1, None)
    key_point2, descrip2 = detect.detectAndCompute(image2, None)
    return (key_point1, descrip1, key_point2, descrip2)


# match the descriptors with the brute force matcher and
# order the matches by their Hamming distance
def BF_FeatureMatcher(des1, des2):
    brute_force = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)
    no_of_matches = brute_force.match(des1, des2)

    # sort the matches by Hamming distance, smallest first
    no_of_matches = sorted(no_of_matches, key=lambda x: x.distance)
    return no_of_matches


# display the output image with the feature matches
def display_output(pic1, kpt1, pic2, kpt2, best_match):
    # draw the first thirty best feature matches with drawMatches()
    output_image = cv2.drawMatches(pic1, kpt1, pic2, kpt2,
                                   best_match[:30], None, flags=2)
    cv2.imshow('Output image', output_image)


# main function
if __name__ == '__main__':
    # paths of the two images
    first_image_path = 'C:/Users/Python(ds)/Titan_1.jpg'
    second_image_path = 'C:/Users/Python(ds)/Titan_nor.jpg'

    # read the images from their paths
    img1, img2 = read_image(first_image_path, second_image_path)

    # convert the images that were read to grayscale
    gray_pic1, gray_pic2 = convert_to_grayscale(img1, img2)

    # store the key points and descriptors found in both images
    key_pt1, descrip1, key_pt2, descrip2 = detector(gray_pic1, gray_pic2)

    # matches from the brute force matcher, sorted best first
    number_of_matches = BF_FeatureMatcher(descrip1, descrip2)
    tot_feature_matches = len(number_of_matches)
    print(f'Total Number of Features matches found are {tot_feature_matches}')

    # draw the feature matches and display the output image
    display_output(gray_pic1, key_pt1, gray_pic2, key_pt2, number_of_matches)

    cv2.waitKey()
    cv2.destroyAllWindows()

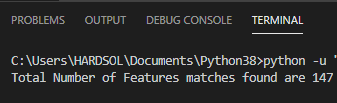

Output:

In the example above, we get a total of 147 best feature matches, of which we draw only the top 30 so that the matches can be seen clearly.

Example 6: Feature matching using the Brute Force Matcher with a rotated train image.

Python

# import the OpenCV library
import cv2


# read both images from their paths
def read_image(path1, path2):
    read_img1 = cv2.imread(path1)
    read_img2 = cv2.imread(path2)
    return (read_img1, read_img2)


# convert both images from BGR to grayscale
def convert_to_grayscale(pic1, pic2):
    gray_img1 = cv2.cvtColor(pic1, cv2.COLOR_BGR2GRAY)
    gray_img2 = cv2.cvtColor(pic2, cv2.COLOR_BGR2GRAY)
    return (gray_img1, gray_img2)


# detect features by finding the key points and descriptors of each image
def detector(image1, image2):
    # create the ORB detector
    detect = cv2.ORB_create()

    # find key points and descriptors of both images with detectAndCompute()
    key_point1, descrip1 = detect.detectAndCompute(image1, None)
    key_point2, descrip2 = detect.detectAndCompute(image2, None)
    return (key_point1, descrip1, key_point2, descrip2)


# match the descriptors with the brute force matcher and
# order the matches by their Hamming distance
def BF_FeatureMatcher(des1, des2):
    brute_force = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)
    no_of_matches = brute_force.match(des1, des2)

    # sort the matches by Hamming distance, smallest first
    no_of_matches = sorted(no_of_matches, key=lambda x: x.distance)
    return no_of_matches


# display the output image with the feature matches
def display_output(pic1, kpt1, pic2, kpt2, best_match):
    # draw the first thirty best feature matches with drawMatches()
    output_image = cv2.drawMatches(pic1, kpt1, pic2, kpt2,
                                   best_match[:30], None, flags=2)
    cv2.imshow('Output image', output_image)


# main function
if __name__ == '__main__':
    # paths of the two images
    first_image_path = 'C:/Users/Python(ds)/Titan_1.jpg'
    second_image_path = 'C:/Users/Python(ds)/Titan_rotated.jpg'

    # read the images from their paths
    img1, img2 = read_image(first_image_path, second_image_path)

    # convert the images that were read to grayscale
    gray_pic1, gray_pic2 = convert_to_grayscale(img1, img2)

    # store the key points and descriptors found in both images
    key_pt1, descrip1, key_pt2, descrip2 = detector(gray_pic1, gray_pic2)

    # matches from the brute force matcher, sorted best first
    number_of_matches = BF_FeatureMatcher(descrip1, descrip2)
    tot_feature_matches = len(number_of_matches)
    print(f'Total Number of Features matches found are {tot_feature_matches}')

    # draw the feature matches and display the output image
    display_output(gray_pic1, key_pt1, gray_pic2, key_pt2, number_of_matches)

    cv2.waitKey()
    cv2.destroyAllWindows()

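Output:

In this example, when we use the rotated train image, there is almost no difference in the total number of best feature matches, which is 148.

In the first output image, we draw only the top 30 best feature matches.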