PySpark – Order by Multiple Columns

In this article, we will see how to sort a PySpark DataFrame by multiple columns using Python.

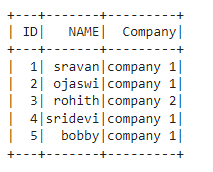

Creating a DataFrame for demonstration:

Python3

# importing module
import pyspark

# importing sparksession from pyspark.sql module
from pyspark.sql import SparkSession

# creating sparksession and giving an app name
spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# list of employee data
data = [["1", "sravan", "company 1"],
        ["2", "ojaswi", "company 1"],
        ["3", "rohith", "company 2"],
        ["4", "sridevi", "company 1"],
        ["5", "bobby", "company 1"]]

# specify column names
columns = ['ID', 'NAME', 'Company']

# creating a dataframe from the lists of data
dataframe = spark.createDataFrame(data, columns)

dataframe.show()

Output:

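Ordering by multiple columns means sorting the DataFrame on several columns at once, in ascending or descending order. We can do this with either of the following methods.

Method 1: Using orderBy()

This function returns the DataFrame after sorting it by multiple columns. Rows are ordered by the first column given; each subsequent column only breaks ties among rows that are equal on the columns before it.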
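The tie-breaking rule described above can be pictured with plain Python tuples. This is only a sketch of the ordering semantics, not of how Spark executes a sort; the variable names `rows` and `by_company_then_name` are illustrative and do not come from the article.

```python
# Same employee data as in the article, as plain tuples: (ID, NAME, Company)
rows = [("1", "sravan", "company 1"),
        ("2", "ojaswi", "company 1"),
        ("3", "rohith", "company 2"),
        ("4", "sridevi", "company 1"),
        ("5", "bobby", "company 1")]

# Sort by Company first; NAME is only consulted when Company values are equal
by_company_then_name = sorted(rows, key=lambda r: (r[2], r[1]))

for row in by_company_then_name:
    print(row)
```

All four "company 1" rows come first, ordered by name, and the single "company 2" row comes last.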

Syntax:

- Ascending order: dataframe.orderBy(['column1', 'column2', ..., 'column n'], ascending=True).show()
- Descending order: dataframe.orderBy(['column1', 'column2', ..., 'column n'], ascending=False).show()

where:

- dataframe is the input PySpark DataFrame
- ascending=True sorts the DataFrame in ascending order
- ascending=False sorts the DataFrame in descending order

Example 1: Sort the PySpark DataFrame in ascending order using orderBy().

Python3

# importing module
import pyspark

# importing sparksession from pyspark.sql module
from pyspark.sql import SparkSession

# creating sparksession and giving an app name
spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# list of employee data
data = [["1", "sravan", "company 1"],
        ["2", "ojaswi", "company 1"],
        ["3", "rohith", "company 2"],
        ["4", "sridevi", "company 1"],
        ["5", "bobby", "company 1"]]

# specify column names
columns = ['ID', 'NAME', 'Company']

# creating a dataframe from the lists of data
dataframe = spark.createDataFrame(data, columns)

# orderBy dataframe in asc order
dataframe.orderBy(['NAME', 'ID', 'Company'],
                  ascending=True).show()

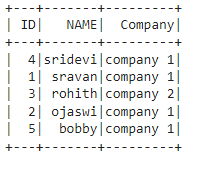

输出:

Example 2: Sort the PySpark DataFrame in descending order using orderBy().

Python3

# importing module
import pyspark

# importing sparksession from pyspark.sql module
from pyspark.sql import SparkSession

# creating sparksession and giving an app name
spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# list of employee data
data = [["1", "sravan", "company 1"],
        ["2", "ojaswi", "company 1"],
        ["3", "rohith", "company 2"],
        ["4", "sridevi", "company 1"],
        ["5", "bobby", "company 1"]]

# specify column names
columns = ['ID', 'NAME', 'Company']

# creating a dataframe from the lists of data
dataframe = spark.createDataFrame(data, columns)

# orderBy dataframe in desc order
dataframe.orderBy(['NAME', 'ID', 'Company'],
                  ascending=False).show()

Output:

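Method 2: Using sort()

This function returns the DataFrame after sorting it by multiple columns. Rows are ordered by the first column given; each subsequent column only breaks ties among rows that are equal on the columns before it.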

Syntax:

- Ascending order: dataframe.sort(['column1', 'column2', ..., 'column n'], ascending=True).show()
- Descending order: dataframe.sort(['column1', 'column2', ..., 'column n'], ascending=False).show()

where:

- dataframe is the input PySpark DataFrame
- ascending=True sorts the DataFrame in ascending order
- ascending=False sorts the DataFrame in descending order

Example 1: Sort the PySpark DataFrame in ascending order using sort().

Python3

# importing module
import pyspark

# importing sparksession from pyspark.sql module
from pyspark.sql import SparkSession

# creating sparksession and giving an app name
spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# list of employee data
data = [["1", "sravan", "company 1"],
        ["2", "ojaswi", "company 1"],
        ["3", "rohith", "company 2"],
        ["4", "sridevi", "company 1"],
        ["5", "bobby", "company 1"]]

# specify column names
columns = ['ID', 'NAME', 'Company']

# creating a dataframe from the lists of data
dataframe = spark.createDataFrame(data, columns)

# sort dataframe in asc order
dataframe.sort(['NAME', 'ID', 'Company'],
               ascending=True).show()

Output:

Example 2: Sort the PySpark DataFrame in descending order using sort().

Python3

# importing module
import pyspark

# importing sparksession from pyspark.sql module
from pyspark.sql import SparkSession

# creating sparksession and giving an app name
spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# list of employee data
data = [["1", "sravan", "company 1"],
        ["2", "ojaswi", "company 1"],
        ["3", "rohith", "company 2"],
        ["4", "sridevi", "company 1"],
        ["5", "bobby", "company 1"]]

# specify column names
columns = ['ID', 'NAME', 'Company']

# creating a dataframe from the lists of data
dataframe = spark.createDataFrame(data, columns)

# sort dataframe in desc order
dataframe.sort(['NAME', 'ID', 'Company'],
               ascending=False).show()

Output: