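How to find the sum of a particular column in a PySpark DataFrame

In this article, we will find the sum of a PySpark DataFrame column in Python. We will use the agg() function to compute the sum of a column.

Let's create a sample DataFrame.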

```python
# importing module
import pyspark

# importing SparkSession from pyspark.sql module
from pyspark.sql import SparkSession

# creating SparkSession and giving an app name
spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# list of students data
data = [["1", "sravan", "vignan", 67, 89],
        ["2", "ojaswi", "vvit", 78, 89],
        ["3", "rohith", "vvit", 100, 80],
        ["4", "sridevi", "vignan", 78, 80],
        ["1", "sravan", "vignan", 89, 98],
        ["5", "gnanesh", "iit", 94, 98]]

# specify column names
columns = ['student ID', 'student NAME', 'college',
           'subject 1', 'subject 2']

# creating a DataFrame from the lists of data
dataframe = spark.createDataFrame(data, columns)

# display the DataFrame
dataframe.show()
```

Output:

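Using the agg() method:

The agg() method computes aggregate values over a DataFrame. When it is given a dictionary that maps a column name to 'sum', it returns a new single-row DataFrame containing the sum of that column.

Syntax:

`dataframe.agg({'column_name': 'sum'})`

where,

- dataframe is the input DataFrame
- column_name is the column in the DataFrame to be summed
- sum is the aggregate function that returns the total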

Example 1: Python program to find the sum of a DataFrame column

```python
# importing module
import pyspark

# importing SparkSession from pyspark.sql module
from pyspark.sql import SparkSession

# creating SparkSession and giving an app name
spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# list of students data
data = [["1", "sravan", "vignan", 67, 89],
        ["2", "ojaswi", "vvit", 78, 89],
        ["3", "rohith", "vvit", 100, 80],
        ["4", "sridevi", "vignan", 78, 80],
        ["1", "sravan", "vignan", 89, 98],
        ["5", "gnanesh", "iit", 94, 98]]

# specify column names
columns = ['student ID', 'student NAME', 'college',
           'subject 1', 'subject 2']

# creating a DataFrame from the lists of data
dataframe = spark.createDataFrame(data, columns)

# find the sum of the 'subject 1' column
dataframe.agg({'subject 1': 'sum'}).show()
```

Output:

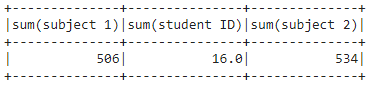

Example 2: Get the sum of multiple columns

```python
# importing module
import pyspark

# importing SparkSession from pyspark.sql module
from pyspark.sql import SparkSession

# creating SparkSession and giving an app name
spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# list of students data
data = [["1", "sravan", "vignan", 67, 89],
        ["2", "ojaswi", "vvit", 78, 89],
        ["3", "rohith", "vvit", 100, 80],
        ["4", "sridevi", "vignan", 78, 80],
        ["1", "sravan", "vignan", 89, 98],
        ["5", "gnanesh", "iit", 94, 98]]

# specify column names
columns = ['student ID', 'student NAME', 'college',
           'subject 1', 'subject 2']

# creating a DataFrame from the lists of data
dataframe = spark.createDataFrame(data, columns)

# find the sum of multiple columns
dataframe.agg({'subject 1': 'sum', 'student ID': 'sum',
               'subject 2': 'sum'}).show()
```

Output: