Creating a PySpark DataFrame from a Dictionary

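In this article, we will discuss how to create a PySpark DataFrame from dictionaries. To do this, use the spark.createDataFrame() method. Its first argument, data, holds the rows (here, a list of Python dictionaries). Its optional second argument, schema, can be a list of column names. When every row is a dictionary, the column names are taken from the dictionary keys, so the schema can be omitted.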
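To make the "data plus a list of column names" form concrete, the sketch below splits a list of row dictionaries into a column-name list and a list of row tuples. The helper name `to_rows_and_columns` is hypothetical (it is not part of PySpark), and it assumes every dictionary has exactly the same keys. It takes the column order from the first dictionary's key order.

```python
from typing import Any, Dict, List, Tuple


def to_rows_and_columns(
    records: List[Dict[str, Any]],
) -> Tuple[List[str], List[Tuple[Any, ...]]]:
    """Hypothetical helper: split row dictionaries into (column names, row tuples).

    Column order follows the key order of the first dictionary.
    Every dictionary must have exactly the same keys.
    """
    if not records:
        return [], []
    columns = list(records[0].keys())
    rows = []
    for record in records:
        if set(record) != set(columns):
            raise ValueError(f"record keys {sorted(record)} do not match {sorted(columns)}")
        # Look values up by column name so each tuple lines up with `columns`.
        rows.append(tuple(record[name] for name in columns))
    return columns, rows


data = [{'student_id': 12, 'name': 'sravan', 'address': 'kakumanu'},
        {'student_id': 14, 'name': 'jyothika', 'address': 'tenali'}]
columns, rows = to_rows_and_columns(data)
print(columns)  # ['student_id', 'name', 'address']
print(rows)     # [(12, 'sravan', 'kakumanu'), (14, 'jyothika', 'tenali')]

# With an active SparkSession named `spark`, the two pieces map onto
# createDataFrame(data, schema):
# dataframe = spark.createDataFrame(rows, columns)
```

Passing the column list explicitly fixes the column order yourself instead of leaving it to schema inference.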

Example 1: Python code that stores one student's address details in a dictionary and converts it into a DataFrame

Python3

# importing module
import pyspark

# importing SparkSession from the pyspark.sql module
from pyspark.sql import SparkSession

# creating a SparkSession and giving it an app name
spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# list of college data with one dictionary
data = [{'student_id': 12, 'name': 'sravan',
         'address': 'kakumanu'}]

# creating a dataframe
dataframe = spark.createDataFrame(data)

# show data frame
dataframe.show()

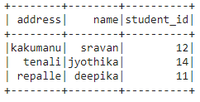

dataframe.show()输出:
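Because no schema is passed in Example 1, Spark infers each column's type from the dictionary values, and dataframe.printSchema() reports the result. As a rough illustration only, the hypothetical `sketch_schema` function below maps the value types used here to the type names printSchema() shows (a Python int becomes long, a str becomes string). It is a simplified sketch, not Spark's inference code, and it ignores nulls, nested values, and date/time types.

```python
from typing import Any, Dict

# Hypothetical, simplified mapping from Python value types to the type names
# that dataframe.printSchema() shows. This is NOT Spark's actual inference code.
_TYPE_NAMES = [
    (bool, "boolean"),  # checked before int, because bool is a subclass of int
    (int, "long"),
    (float, "double"),
    (str, "string"),
]


def sketch_schema(record: Dict[str, Any]) -> Dict[str, str]:
    """Return {column name: type name} for one row dictionary."""
    schema = {}
    for name, value in record.items():
        for py_type, type_name in _TYPE_NAMES:
            if isinstance(value, py_type):
                schema[name] = type_name
                break
        else:
            # Types outside this small table are not handled by the sketch.
            raise TypeError(f"no mapping for {type(value).__name__} in this sketch")
    return schema


print(sketch_schema({'student_id': 12, 'name': 'sravan', 'address': 'kakumanu'}))
# {'student_id': 'long', 'name': 'string', 'address': 'string'}
```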

Example 2: Create three dictionaries and pass them to createDataFrame() to build a PySpark DataFrame

Python3

# importing module
import pyspark

# importing SparkSession from the pyspark.sql module
from pyspark.sql import SparkSession

# creating a SparkSession and giving it an app name
spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# list of college data with three dictionaries
data = [{'student_id': 12, 'name': 'sravan', 'address': 'kakumanu'},
        {'student_id': 14, 'name': 'jyothika', 'address': 'tenali'},
        {'student_id': 11, 'name': 'deepika', 'address': 'repalle'}]

# creating a dataframe
dataframe = spark.createDataFrame(data)

# show data frame
dataframe.show()

Output:
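Sometimes the data starts out as a single column-oriented dictionary (one list of values per key) rather than a list of row dictionaries. A minimal sketch, assuming all value lists have the same length, reshapes it into the list-of-dictionaries form that Example 2 passes to createDataFrame(). The helper name `columns_to_records` is hypothetical and not part of PySpark.

```python
from typing import Any, Dict, List


def columns_to_records(columns: Dict[str, List[Any]]) -> List[Dict[str, Any]]:
    """Hypothetical helper: turn {column: [values...]} into one dict per row."""
    lengths = {len(values) for values in columns.values()}
    if len(lengths) > 1:
        raise ValueError("all value lists must have the same length")
    names = list(columns)
    # zip(*columns.values()) walks the value lists in parallel, one row at a time.
    return [dict(zip(names, row)) for row in zip(*columns.values())]


student_columns = {
    'student_id': [12, 14, 11],
    'name': ['sravan', 'jyothika', 'deepika'],
    'address': ['kakumanu', 'tenali', 'repalle'],
}
records = columns_to_records(student_columns)
print(records[1])  # {'student_id': 14, 'name': 'jyothika', 'address': 'tenali'}

# With an active SparkSession named `spark`, the result can be used as in Example 2:
# dataframe = spark.createDataFrame(records)
```

The length check fails early on ragged input instead of letting zip() silently drop the extra values.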