Converting a Python Dictionary List to a PySpark DataFrame

In this article, we will discuss how to convert a list of Python dictionaries to a PySpark DataFrame.

This can be done in the following ways:

- Using an inferred schema

- Using an explicit schema

- Using a SQL expression

Method 1: Inferring the schema from the dictionary

We pass the dictionary list directly to the createDataFrame() method.

Syntax: spark.createDataFrame(data)

Example: Python code to create a PySpark DataFrame from a dictionary list using this method

Python3

# import the modules
from pyspark.sql import SparkSession

# Create Spark session: app name is GFG and master name is local
spark = SparkSession.builder.appName("GFG").master("local").getOrCreate()

# dictionary list of college data
data = [{"Name": 'sravan kumar', "ID": 1, "Percentage": 94.29},
        {"Name": 'sravani', "ID": 2, "Percentage": 84.29},
        {"Name": 'kumar', "ID": 3, "Percentage": 94.29}]

# Create data frame from dictionary list
df = spark.createDataFrame(data)

# display
df.show()


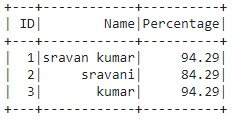

dataframe.show()输出:
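Because Method 1 leaves the column types to Spark, it can be useful to look at what the dictionaries actually contain before calling createDataFrame(). The sketch below is one way to do that in plain Python. The helper name summarize_value_types is hypothetical and not part of PySpark, and it only reports the Python types it sees, not the Spark types that will be inferred.

```python
from collections import defaultdict


def summarize_value_types(records):
    """Map each key to the sorted list of Python type names seen for it."""
    seen = defaultdict(set)
    for record in records:
        for key, value in record.items():
            seen[key].add(type(value).__name__)
    return {key: sorted(names) for key, names in seen.items()}


# Same dictionary list as in the Method 1 example
data = [{"Name": 'sravan kumar', "ID": 1, "Percentage": 94.29},
        {"Name": 'sravani', "ID": 2, "Percentage": 84.29},
        {"Name": 'kumar', "ID": 3, "Percentage": 94.29}]

summary = summarize_value_types(data)
print(summary)

# A key that shows more than one type name is worth checking before inference
mixed = {key: names for key, names in summary.items() if len(names) > 1}
print(mixed)
```

Once the DataFrame has been created, df.printSchema() shows the types Spark actually inferred.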

Method 2: Using an explicit schema

Here we create a schema and pass it along with the data to the createDataFrame() method.

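Schema structure:

schema = StructType([
    StructField('column_1', DataType(), False),
    StructField('column_2', DataType(), False)])

Here column_1 and column_2 are the names of the dictionary keys to be used as columns in the PySpark DataFrame, DataType is the data type of that column, and the third argument states whether the column is nullable.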

Syntax: spark.createDataFrame(data, schema)

Where,

- data is the dictionary list

- schema is the schema of the dataframe

Python program to create a PySpark DataFrame from a dictionary list using this method.

Python3

# import the modules
from pyspark.sql import SparkSession
from pyspark.sql.types import (StructField, StructType,
                               StringType, IntegerType, FloatType)

# Create Spark session: app name is GFG and master name is local
spark = SparkSession.builder.appName("GFG").master("local").getOrCreate()

# dictionary list of college data
data = [{"Name": 'sravan kumar', "ID": 1, "Percentage": 94.29},
        {"Name": 'sravani', "ID": 2, "Percentage": 84.29},
        {"Name": 'kumar', "ID": 3, "Percentage": 94.29}]

# specify the schema
schema = StructType([
    StructField('Name', StringType(), False),
    StructField('ID', IntegerType(), False),
    StructField('Percentage', FloatType(), True)
])

# Create data frame from dictionary list through the schema
df = spark.createDataFrame(data, schema)

# display
df.show()

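With an explicit schema, the values in each dictionary need to match the declared column types. A minimal sketch of a pre-check in plain Python follows. The helper find_mismatches, the expected mapping, and the nullable argument are all hypothetical. They only mirror the schema above by assumption (StringType as str, IntegerType as int, FloatType as float, and Percentage as the one nullable column).

```python
def find_mismatches(records, expected, nullable=()):
    """Return (row index, column, value) for each value that does not fit.

    A missing key is treated like None, which is accepted only for
    columns listed in `nullable`.
    """
    problems = []
    for index, record in enumerate(records):
        for column, python_type in expected.items():
            value = record.get(column)
            if value is None:
                if column not in nullable:
                    problems.append((index, column, value))
            elif not isinstance(value, python_type):
                problems.append((index, column, value))
    return problems


# Assumed Python counterparts of the column types in the schema
expected = {"Name": str, "ID": int, "Percentage": float}

good = [{"Name": 'sravan kumar', "ID": 1, "Percentage": 94.29}]
bad = [{"Name": 'sravani', "ID": "2", "Percentage": None},
       {"Name": None, "ID": 3, "Percentage": 84.29}]

good_problems = find_mismatches(good, expected, nullable={"Percentage"})
bad_problems = find_mismatches(bad, expected, nullable={"Percentage"})
print(good_problems)
print(bad_problems)
```

Records that come back with an empty problem list can then be passed to spark.createDataFrame(data, schema) as in the program above.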

Method 3: Using a SQL expression

Here we use Row objects to convert the Python dictionary list to a PySpark DataFrame.

Syntax: spark.createDataFrame([Row(**iterator) for iterator in data])

where:

- createDataFrame() is the method to create the dataframe

- Row(**iterator) unpacks one dictionary into a Row; the list comprehension applies it to every dictionary in the list.

- data is the dictionary list

Python code to convert a dictionary list to a PySpark DataFrame.

Python3

# import the modules
from pyspark.sql import SparkSession, Row

# Create Spark session: app name is GFG and master name is local
spark = SparkSession.builder.appName("GFG").master("local").getOrCreate()

# dictionary list of college data
data = [{"Name": 'sravan kumar', "ID": 1, "Percentage": 94.29},
        {"Name": 'sravani', "ID": 2, "Percentage": 84.29},
        {"Name": 'kumar', "ID": 3, "Percentage": 94.29}]

# create dataframe using sql expression
dataframe = spark.createDataFrame([Row(**variable)
                                   for variable in data])

dataframe.show()

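The ** in Row(**variable) is ordinary Python keyword unpacking: each key of the dictionary becomes a keyword argument. The sketch below shows that mechanism without Spark. It uses a hypothetical stand-in function make_row in place of pyspark.sql.Row, so it illustrates only the unpacking step, not the behavior of Row itself.

```python
def make_row(**fields):
    """Stand-in for Row: return the keyword arguments as (name, value) pairs."""
    return tuple(fields.items())


# Same dictionary list as in the Method 3 example
data = [{"Name": 'sravan kumar', "ID": 1, "Percentage": 94.29},
        {"Name": 'sravani', "ID": 2, "Percentage": 84.29},
        {"Name": 'kumar', "ID": 3, "Percentage": 94.29}]

# Unpack every dictionary into keyword arguments, one call per record
rows = [make_row(**record) for record in data]

# Unpacking a dictionary is the same as writing the keywords out by hand
by_hand = make_row(Name='sravan kumar', ID=1, Percentage=94.29)
print(rows[0] == by_hand)
```

Keyword unpacking requires every dictionary key to be a string, so a dictionary with non-string keys would raise a TypeError at this step.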