How to Get Daily News Using Python

In this article, we will see how to get the daily news using Python. We will use the Beautiful Soup and requests modules to scrape the data.

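Required modules

- bs4: Beautiful Soup (bs4) is a Python library for extracting data from HTML and XML files. It does not come built in with Python. To install it, type the following command in the terminal.

pip install bs4

- requests: Requests lets you send HTTP/1.1 requests very easily. It does not come built in with Python either. To install it, type the following command in the terminal.

pip install requests

Step-by-step implementation: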

Step 1: First, import the libraries.

Python3

import requests

from bs4 import BeautifulSoup
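
The imports in Step 1 only work if both packages are installed. As a rough sketch, the check below reports which of them cannot be found before the rest of the script runs. The helper name `missing_modules` is hypothetical and not part of either library; it relies only on the standard library's `importlib.util.find_spec`.

```python
import importlib.util


def missing_modules(names):
    """Return the subset of `names` that cannot be found for import."""
    # find_spec() returns None when a top-level module is not installed.
    return [name for name in names if importlib.util.find_spec(name) is None]


# The import names are `bs4` and `requests`, as used in Step 1.
missing = missing_modules(["bs4", "requests"])
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("All required modules are available.")
```

If a module is reported as missing, the pip commands from the "Required modules" section should install it.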

Step 2: Then, to fetch the HTML content of https://www.bbc.com/news, add the following two lines of code:

Python3

# Address of the page to scrape
url = 'https://www.bbc.com/news'
# Send an HTTP GET request and keep the response
response = requests.get(url)
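
The two lines above assume the request succeeds and returns promptly. A slightly more defensive variant might look like the sketch below. The function name `fetch_html`, the 10-second timeout, and the optional `session` parameter are assumptions made for illustration; `timeout=` and `raise_for_status()` are standard parts of the requests API.

```python
import requests


def fetch_html(url, timeout=10.0, session=None):
    """Return the body of `url` as text, raising on HTTP errors."""
    # Use the caller's session if one is given; otherwise use requests directly.
    http = session if session is not None else requests
    # Without a timeout, a stalled server could block the script indefinitely.
    response = http.get(url, timeout=timeout)
    # raise_for_status() raises requests.HTTPError for 4xx/5xx responses.
    response.raise_for_status()
    return response.text
```

With this helper, `fetch_html('https://www.bbc.com/news')` would return the same text as `response.text` in Step 2, but would fail loudly on an error status instead of passing an error page on to the parser.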

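Step 3: Get the specific HTML tags

To find the HTML tag that contains the news headlines, go to https://www.bbc.com/news, right-click a headline, and click "Inspect".

You will see that all headlines are contained in "<h3>" tags. So, to scrape all "<h3>" tags from this page, add the following lines of code to your script: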

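First, we define "soup" as the HTML content of the BBC News page. Next, we define "headlines" as a list of all "<h3>" tags found on the page. Finally, the script loops over "headlines" and prints each entry, using "text.strip()" to drop the surrounding HTML and show only the text content.

Python3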

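# Parse the downloaded HTML with Python's built-in parser
soup = BeautifulSoup(response.text, 'html.parser')
# Collect every <h3> tag inside <body>
headlines = soup.find('body').find_all('h3')
# Print the text of each tag with surrounding whitespace removed
for x in headlines:
    print(x.text.strip())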
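
To see what `find_all('h3')` and `text.strip()` do without touching the network, the same steps can be tried on a small inline document. This is only a sketch: `SAMPLE_HTML` and the function name `extract_headlines` are made up for illustration, and the markup is not taken from the BBC page.

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for a downloaded page.
SAMPLE_HTML = """
<html><body>
  <h3> First headline </h3>
  <p>Not a headline</p>
  <h3><a href="/news/1">Second <b>headline</b></a></h3>
</body></html>
"""


def extract_headlines(html):
    """Return the stripped text of every <h3> tag in `html`."""
    soup = BeautifulSoup(html, "html.parser")
    # .text joins the text of all nested tags; strip() trims outer whitespace.
    return [tag.text.strip() for tag in soup.find_all("h3")]


print(extract_headlines(SAMPLE_HTML))
# ['First headline', 'Second headline']
```

Note that the nested `<a>` and `<b>` tags disappear from the result and only their text remains, which is the behaviour described in the paragraph above.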

Below is the implementation:

Python3

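import requests
from bs4 import BeautifulSoup

# Download the BBC News front page
url = 'https://www.bbc.com/news'
response = requests.get(url)

# Parse the HTML and collect every <h3> tag inside <body>
soup = BeautifulSoup(response.text, 'html.parser')
headlines = soup.find('body').find_all('h3')

# Print only the text content of each tag
for x in headlines:
    print(x.text.strip())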

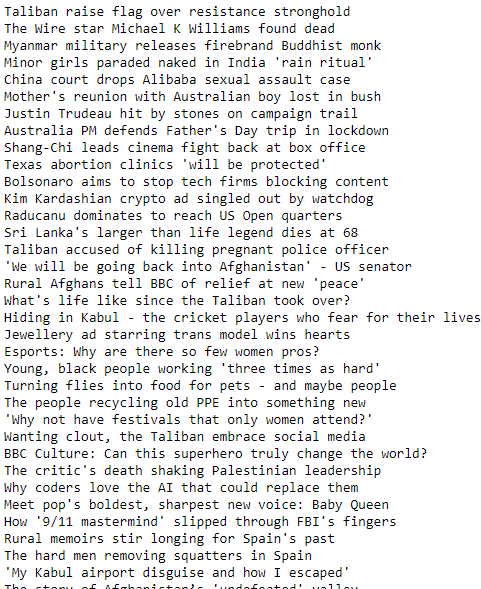

Output:

Cleaning the data

You may have noticed that your output contains duplicate headlines as well as text that is not a headline.
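
Duplicates like these can be dropped while keeping the original order by passing the values through `dict.fromkeys`. The sketch below applies this to plain strings rather than to tag objects; the sample list and the function name `unique_in_order` are hypothetical.

```python
def unique_in_order(texts):
    """Drop repeated strings, keeping the first occurrence of each."""
    # dict keys are unique and, since Python 3.7, keep insertion order.
    return list(dict.fromkeys(texts))


# Hypothetical sample standing in for scraped headline text.
sample = ["Headline A", "Headline B", "Headline A", "Mobile app", "Headline B"]
print(unique_in_order(sample))
# ['Headline A', 'Headline B', 'Mobile app']
```

Deduplicating on the stripped text is one possible design choice. It treats two tags as the same headline whenever their visible text matches, even if their attributes differ.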

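Create a list of all the text elements you want to remove:

unwanted = ['BBC World News TV', 'BBC World Service Radio', 'News daily newsletter', 'Mobile app', 'Get in touch']

Then print a text element only if it is not in this list:

if x.text.strip() not in unwanted:
    print(x.text.strip())

Below is the implementation:

Python3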

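import requests
from bs4 import BeautifulSoup

# Download the BBC News front page
url = 'https://www.bbc.com/news'
response = requests.get(url)

# Parse the HTML and collect every <h3> tag inside <body>
soup = BeautifulSoup(response.text, 'html.parser')
headlines = soup.find('body').find_all('h3')

# Text that appears in <h3> tags but is not a news headline
unwanted = ['BBC World News TV', 'BBC World Service Radio',
            'News daily newsletter', 'Mobile app', 'Get in touch']

# dict.fromkeys() drops entries that compare equal while preserving order
for x in list(dict.fromkeys(headlines)):
    if x.text.strip() not in unwanted:
        print(x.text.strip())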

Output: