Email ID Extractor Project from Sites in Scrapy Python

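Scrapy is an open-source web-crawling framework written in Python. It is used for web scraping and can also be used to extract general-purpose data. In this project, all sub-page links are first collected from the main page, and then email IDs are scraped from those sub-pages with a regular expression.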
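The first step, collecting the links on a page, can be illustrated without Scrapy. The sketch below is a minimal standard-library stand-in for LxmlLinkExtractor, not a description of how that class works internally. It gathers the `href` of every `<a>` tag and resolves relative links against the page URL with `urljoin`. `LinkCollector`, `collect_links` and the sample HTML are hypothetical names and data used only for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect absolute URLs from the href attribute of <a> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links against the page URL
                    self.links.append(urljoin(self.base_url, value))


def collect_links(base_url, html):
    parser = LinkCollector(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


sample = '<a href="/about/">About</a> <a href="https://example.com/contact-us/">Contact</a>'
print(collect_links("https://example.com/", sample))
# ['https://example.com/about/', 'https://example.com/contact-us/']
```

In the spider itself this job is done by LxmlLinkExtractor, which works directly on the Scrapy response.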

This article uses the email IDs extracted from the geeksforgeeks site as its example.

How do you create an email ID extractor project with Scrapy?

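1. Install the packages by running the following commands in a terminal:

```shell
pip install scrapy
pip install scrapy-selenium
```

2. Create the project (here projectname is geeksemailtrack, spidername is emails, and the domain is geeksforgeeks.org):

```shell
scrapy startproject projectname
cd projectname
scrapy genspider spidername domain
```

3. Add the following code to the settings.py file to use scrapy-selenium: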

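```python
from shutil import which

SELENIUM_DRIVER_NAME = 'chrome'
# which() returns the full path of chromedriver, or None if it cannot be found
SELENIUM_DRIVER_EXECUTABLE_PATH = which('chromedriver')
# extra browser arguments, e.g. ['--headless'] to run Chrome without a window
SELENIUM_DRIVER_ARGUMENTS = []

DOWNLOADER_MIDDLEWARES = {
    'scrapy_selenium.SeleniumMiddleware': 800
}
```

4. Now download the ChromeDriver that matches your Chrome version and place it next to your scrapy.cfg file. Because settings.py locates the driver with which('chromedriver'), which looks it up on your PATH, add the driver's folder to PATH if it is not picked up from the project folder. To download ChromeDriver, refer to this site: Download ChromeDriver.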

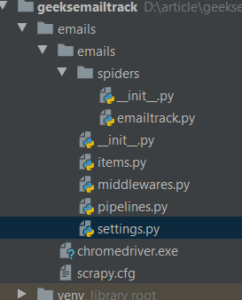
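If chromedriver cannot be found, `which('chromedriver')` silently returns None and the problem only shows up later when the middleware tries to start the browser. A minimal fail-fast check is sketched below, assuming you are happy for settings.py to raise an error at import time. `find_driver` is a hypothetical helper, not part of scrapy-selenium.

```python
import shutil


def find_driver(name="chromedriver"):
    """Return the path that shutil.which finds for `name`, or raise if there is none."""
    path = shutil.which(name)
    if path is None:
        # Fail early with a clear message instead of passing None to the middleware
        raise FileNotFoundError(f"{name!r} was not found; add its folder to PATH")
    return path


if __name__ == "__main__":
    try:
        print("Using driver at", find_driver())
    except FileNotFoundError as exc:
        print(exc)
```

With this helper in settings.py, the setting would read `SELENIUM_DRIVER_EXECUTABLE_PATH = find_driver()`.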

Directory structure:

Step-by-step code:

1. Import all the required libraries:

```python
# web scraping framework
import scrapy
# for regular expressions
import re
# for Selenium requests
from scrapy_selenium import SeleniumRequest
# for link extraction
from scrapy.linkextractors.lxmlhtml import LxmlLinkExtractor
```

2. Create a start_requests function that accesses the site through Selenium. You can add your own URL.

```python
def start_requests(self):
    # request the start page through Selenium; the response goes to parse()
    yield SeleniumRequest(
        url="https://www.geeksforgeeks.org/",
        wait_time=3,
        screenshot=True,
        callback=self.parse,
        dont_filter=True
    )
```

3. Create the parse function:

```python
def parse(self, response):
    # extract all links from the page source
    links = LxmlLinkExtractor(allow=()).extract_links(response)
    # Finallinks contains the link URLs as strings
    Finallinks = [str(link.url) for link in links]
    # URLs that may contain email IDs
    links = []
    # keep only "about us" and "contact us" pages, which usually list email IDs
    for link in Finallinks:
        if ('Contact' in link or 'contact' in link or 'About' in link or 'about' in link
                or 'CONTACT' in link or 'ABOUT' in link):
            links.append(link)
    # the current page is added too, because some sites list email IDs on their main page
    links.append(str(response.url))
    # request the first link; meta passes the remaining links on to parse_link
    l = links.pop(0)
    yield SeleniumRequest(
        url=l,
        wait_time=3,
        screenshot=True,
        callback=self.parse_link,
        dont_filter=True,
        meta={'links': links}
    )
```

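Explanation of the parse function:

- The following lines extract all links from the response for https://www.geeksforgeeks.org/. Finallinks is the list that contains all of them.

```python
links = LxmlLinkExtractor(allow=()).extract_links(response)
Finallinks = [str(link.url) for link in links]
```

- To skip unnecessary links, a filter keeps only links that belong to contact and about pages, so details are scraped only from those pages:

```python
for link in Finallinks:
    if ('Contact' in link or 'contact' in link or 'About' in link or 'about' in link
            or 'CONTACT' in link or 'ABOUT' in link):
        links.append(link)
```

- The filter above is not strictly necessary, but sites have many tags (links): if a site has 50 sub-pages, the spider would otherwise extract emails from all 50 sub-URLs. Emails are assumed to appear mostly on the home page, the contact page and the about page, so the filter avoids wasting time on URLs that probably contain no email IDs.

- The links of pages that may contain email IDs are requested one by one, and the email IDs are scraped from them with a regular expression.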
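The filtering and the one-by-one ordering can be sketched in plain Python. This is an illustration, not the spider's code: `candidate_pages` and `KEYWORDS` are hypothetical names, the URLs are made up, and lower-casing each link is a slightly broader variant of the spider's check, since it also catches mixed-case spellings that none of the six listed variants cover.

```python
KEYWORDS = ("contact", "about")


def candidate_pages(page_url, links):
    """Return contact/about links (case-insensitive) followed by the page itself."""
    selected = [link for link in links if any(k in link.lower() for k in KEYWORDS)]
    # The start page is checked last, since some sites show emails on it
    selected.append(page_url)
    return selected


queue = candidate_pages("https://example.com/", [
    "https://example.com/about/",
    "https://example.com/blog/post-1/",
    "https://example.com/CONTACT-US/",
])
while queue:
    # Like the spider, take the first URL and keep the rest for later requests
    url = queue.pop(0)
    print("would request", url)
```

The loop prints the about page, then the CONTACT-US page, then the start page; the blog post is never requested.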

4. Create the parse_link function:

```python
def parse_link(self, response):
    # response.meta['links'] holds the links that still have to be visited
    links = response.meta['links']
    # pages whose URL contains one of these bad words are skipped
    bad_words = ['facebook', 'instagram', 'youtube', 'twitter', 'wiki', 'linkedin']
    if not any(word in str(response.url) for word in bad_words):
        html_text = str(response.text)
        # regular expression for email IDs (raw string keeps the backslashes intact)
        email_list = re.findall(r'\w+@\w+\.{1}\w+', html_text)
        # adding to the set keeps only unique email IDs
        self.uniqueemail.update(email_list)
    # parse_link is called again while links remain;
    # otherwise the spider moves on to the parsed function
    if len(links) > 0:
        l = links.pop(0)
        yield SeleniumRequest(
            url=l,
            callback=self.parse_link,
            dont_filter=True,
            meta={'links': links}
        )
    else:
        yield SeleniumRequest(
            url=response.url,
            callback=self.parsed,
            dont_filter=True
        )
```

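Explanation of the parse_link function:

response.text gives the full source code of the requested URL. The regular expression used here, `\w+@\w+\.{1}\w+`, can be read as: find every run of one or more word characters (letters, digits or underscores), followed by an at sign ('@'), followed by one or more word characters and exactly one dot, and then one or more word characters again. This is the pattern used to pick out email IDs. Pages whose URL contains one of the bad words (facebook, instagram, youtube, twitter, wiki, linkedin) are skipped, and once no links remain, the spider moves on to the parsed function.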
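The URL check and the pattern can be tried on a made-up string without running the spider. In this sketch `extract_emails`, `EMAIL_RE` and `BAD_WORDS` are hypothetical names; the pattern and the word list are the ones from parse_link.

```python
import re

# Same pattern as the spider; a raw string keeps the backslashes intact
EMAIL_RE = re.compile(r"\w+@\w+\.{1}\w+")
BAD_WORDS = ("facebook", "instagram", "youtube", "twitter", "wiki", "linkedin")


def extract_emails(url, html):
    """Return the unique pattern matches in html, or nothing for social/wiki URLs."""
    if any(word in url for word in BAD_WORDS):
        return set()
    return set(EMAIL_RE.findall(html))


html = "Write to feedback@example.com or careers@example.in. Version select@1.13"
print(sorted(extract_emails("https://example.com/contact/", html)))
# ['careers@example.in', 'feedback@example.com', 'select@1.13']
print(EMAIL_RE.findall("first.last@mail.example.co.in"))
# ['last@mail.example']
```

The first call shows how a version string such as select@1.13 slips through, which is why the parsed function filters the results. The second shows that addresses with a dot before the '@' or a multi-part domain are truncated by this pattern; catching those would need a broader expression.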

5. Create the parsed function:

```python
def parsed(self, response):
    # list of the unique email IDs collected so far
    emails = list(self.uniqueemail)
    finalemail = []
    for email in emails:
        # avoid garbage values: keep emails containing '.in', '.com', 'info' or 'org'
        if '.in' in email or '.com' in email or 'info' in email or 'org' in email:
            finalemail.append(email)
    # final unique email IDs from the geeksforgeeks site
    print('\n' * 2)
    print("Emails scraped", finalemail)
    print('\n' * 2)
```

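Explanation of the parsed function:

The regular expression above also produces garbage values: among the email IDs scraped from geeksforgeeks was select@1.13, which is clearly not an email ID. The parsed function therefore applies a filter that keeps only the strings containing '.com', '.in', 'info' or 'org'.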
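The effect of this filter can be checked on a small made-up set. `keep_plausible` is a hypothetical helper that applies the same substring test as parsed; sorting is added only to make the output order predictable.

```python
def keep_plausible(emails):
    """Keep strings that contain '.in', '.com', 'info' or 'org', like parsed() does."""
    markers = (".in", ".com", "info", "org")
    return sorted(e for e in emails if any(m in e for m in markers))


scraped = {"feedback@example.com", "careers@example.in", "select@1.13"}
print(keep_plausible(scraped))
# ['careers@example.in', 'feedback@example.com']
print(keep_plausible({"george@1.2"}))
# ['george@1.2']
```

The second call shows that the substring test is loose: george@1.2 passes only because "george" contains "org". Checking just the part after the last dot would be a stricter option.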

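Run the spider with the following command:

```shell
scrapy crawl spidername
```

Here spidername is the name of the spider (its name attribute, which is emailtrack in the complete code).

[Figure: garbage values in the scraped emails]

[Figure: final scraped emails]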

Complete code:

```python
# web scraping framework
import scrapy
# for regular expressions
import re
# for Selenium requests
from scrapy_selenium import SeleniumRequest
# for link extraction
from scrapy.linkextractors.lxmlhtml import LxmlLinkExtractor


class EmailtrackSpider(scrapy.Spider):
    # name of the spider, used with "scrapy crawl"
    name = 'emailtrack'
    # set of unique email IDs collected from all visited pages
    uniqueemail = set()

    # start_requests sends a request to https://www.geeksforgeeks.org/
    # and the response is passed to parse()
    def start_requests(self):
        yield SeleniumRequest(
            url="https://www.geeksforgeeks.org/",
            wait_time=3,
            screenshot=True,
            callback=self.parse,
            dont_filter=True
        )

    def parse(self, response):
        # extract all links from the page source
        links = LxmlLinkExtractor(allow=()).extract_links(response)
        # Finallinks contains the link URLs as strings
        Finallinks = [str(link.url) for link in links]
        # URLs that may contain email IDs
        links = []
        # keep only "about us" and "contact us" pages, which usually list email IDs
        for link in Finallinks:
            if ('Contact' in link or 'contact' in link or 'About' in link or 'about' in link
                    or 'CONTACT' in link or 'ABOUT' in link):
                links.append(link)
        # the current page is added too, because some sites list email IDs on their main page
        links.append(str(response.url))
        # request the first link; meta passes the remaining links on to parse_link
        l = links.pop(0)
        yield SeleniumRequest(
            url=l,
            wait_time=3,
            screenshot=True,
            callback=self.parse_link,
            dont_filter=True,
            meta={'links': links}
        )

    def parse_link(self, response):
        # links that still have to be visited
        links = response.meta['links']
        # pages whose URL contains one of these bad words are skipped
        bad_words = ['facebook', 'instagram', 'youtube', 'twitter', 'wiki', 'linkedin']
        if not any(word in str(response.url) for word in bad_words):
            html_text = str(response.text)
            # regular expression for email IDs (raw string keeps the backslashes intact)
            email_list = re.findall(r'\w+@\w+\.{1}\w+', html_text)
            # adding to the set keeps only unique email IDs
            self.uniqueemail.update(email_list)
        # call parse_link again while links remain, otherwise move on to parsed
        if len(links) > 0:
            l = links.pop(0)
            yield SeleniumRequest(
                url=l,
                callback=self.parse_link,
                dont_filter=True,
                meta={'links': links}
            )
        else:
            yield SeleniumRequest(
                url=response.url,
                callback=self.parsed,
                dont_filter=True
            )

    def parsed(self, response):
        # list of the unique email IDs
        emails = list(self.uniqueemail)
        finalemail = []
        for email in emails:
            # avoid garbage values: keep emails containing '.in', '.com', 'info' or 'org'
            if '.in' in email or '.com' in email or 'info' in email or 'org' in email:
                finalemail.append(email)
        # final unique email IDs from the geeksforgeeks site
        print('\n' * 2)
        print("Emails scraped", finalemail)
        print('\n' * 2)
```

Working video of the above code:

Reference: Link Extractors