Imbalanced-Learn module in Python

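Imbalanced-Learn is a Python module that helps balance datasets that are heavily skewed or biased toward certain classes. It does this by resampling the classes that are over-represented or under-represented. The higher the imbalance ratio, the more a model's output is biased toward the class with more examples. The following dependencies need to be installed to use imbalanced-learn: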

- scipy(>=0.19.1)

- numpy(>=1.13.3)

- scikit-learn(>=0.23)

- joblib(>=0.11)

- keras 2 (optional)

- tensorflow (optional)

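To install imbalanced-learn, simply type:

pip install imbalanced-learn

Resampling of data is done in two parts:

Estimator: it implements a fit method derived from scikit-learn. The data is a 2-D array of shape n_samples*n_features and the targets are an array with one label per sample.

estimator = obj.fit(data, targets)

Resampler: the fit_resample method resamples the data and targets and returns them as the pair data_resampled, targets_resampled.

data_resampled, targets_resampled = obj.fit_resample(data, targets)

The imbalanced-learn module provides several oversampling and undersampling algorithms.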
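The fit / fit_resample contract described above can be sketched with a toy class. This is only an illustration of the calling convention: `IdentitySampler` is a hypothetical name, it is not part of imbalanced-learn, and it returns the data unchanged where a real sampler would add or drop rows.

```python
class IdentitySampler:
    """Hypothetical sampler that mimics the fit / fit_resample contract.

    Not part of imbalanced-learn; it hands the data back unchanged.
    """

    def fit(self, data, targets):
        # Basic validation: one target per row of data.
        if len(data) != len(targets):
            raise ValueError("data and targets must have the same length")
        self.n_features_in_ = len(data[0])
        return self  # scikit-learn convention: fit returns the estimator

    def fit_resample(self, data, targets):
        self.fit(data, targets)
        # A real sampler would add or remove rows at this point.
        return list(data), list(targets)


data = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]  # 2-D: n_samples * n_features
targets = [0, 0, 1]                          # one label per sample

obj = IdentitySampler()
estimator = obj.fit(data, targets)
data_resampled, targets_resampled = obj.fit_resample(data, targets)
print(len(data_resampled), len(targets_resampled))
```

Note that fit_resample hands back two objects that are unpacked on the left-hand side, which is why the call is written as a two-name assignment.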

We will use make_classification, scikit-learn's built-in dataset generator, which returns

- x: a matrix of shape n_samples*n_features and

- y: an array of integer labels.


# import required modules

from sklearn.datasets import make_classification

# define dataset

x, y = make_classification(n_samples=10000, weights=[0.99], flip_y=0)

# print the features and the labels

print('x:\n', x)

print('y:\n', y)
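Before resampling, it can help to measure how skewed the labels are. The sketch below uses only the standard library. The label list is a hand-built stand-in for a roughly 99:1 split of 10,000 samples, not the actual output of make_classification, and `imbalance_ratio` is an illustrative name.

```python
from collections import Counter

# Hypothetical labels mimicking a 99:1 split of 10,000 samples
y = [0] * 9900 + [1] * 100

counts = Counter(y)                      # samples per class
majority_count = max(counts.values())
minority_count = min(counts.values())

# Imbalance ratio: majority samples per minority sample
imbalance_ratio = majority_count / minority_count

print("class counts:", dict(counts))
print("imbalance ratio:", imbalance_ratio)
```

The same Counter call can be applied to the labels returned by any of the samplers to confirm that the classes have been balanced.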


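Below are some programs that show how to apply oversampling and undersampling to the dataset:

Oversampling

- Random Over Sampler: a naive method that balances the data by randomly resampling, with replacement, the existing samples of the classes that have fewer samples.

Syntax:

from imblearn.over_sampling import RandomOverSampler

Parameters(optional): sampling_strategy='auto', random_state=None

Implementation:

oversample = RandomOverSampler(sampling_strategy='minority')

X_oversample, Y_oversample = oversample.fit_resample(X, Y)

Return Type: the resampled feature matrix of shape n_samples_new*n_features and the matching array of labels

Example: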

# import required modules

from sklearn.datasets import make_classification

from imblearn.over_sampling import RandomOverSampler

# define dataset

x, y = make_classification(n_samples=10000,

weights=[0.99],

flip_y=0)

oversample = RandomOverSampler(sampling_strategy='minority')

x_over, y_over = oversample.fit_resample(x, y)

# print the features and the labels

print('x_over:\n', x_over)

print('y_over:\n', y_over)
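To make the idea behind random oversampling concrete, here is a minimal from-scratch sketch using only the standard library. It is not how imbalanced-learn implements RandomOverSampler. `random_oversample_minority` is a hypothetical helper that copies randomly chosen rows of the smallest class until it is as large as the largest class.

```python
import random
from collections import Counter


def random_oversample_minority(X, y, seed=0):
    """Duplicate random minority rows until the minority class is as
    large as the majority class (hypothetical helper)."""
    rng = random.Random(seed)
    counts = Counter(y)
    minority = min(counts, key=counts.get)
    n_missing = max(counts.values()) - counts[minority]
    minority_rows = [row for row, label in zip(X, y) if label == minority]
    # Sampling with replacement: the same row may be copied several times.
    extra = [rng.choice(minority_rows) for _ in range(n_missing)]
    return list(X) + extra, list(y) + [minority] * n_missing


# Tiny hand-made dataset: 8 samples of class 0, 2 samples of class 1
X = [[i, i + 1] for i in range(10)]
y = [0] * 8 + [1] * 2

X_over, y_over = random_oversample_minority(X, y)
print(Counter(y_over))
```

Because the new rows are exact copies, no new information is added; the classifier simply sees the minority samples more often.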


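- SMOTE, ADASYN: Synthetic Minority Oversampling Technique (SMOTE) and Adaptive Synthetic (ADASYN) are 2 methods used in oversampling. Instead of duplicating rows, both generate new synthetic samples for the minority class by interpolating between an existing minority sample and one of its nearest neighbours. ADASYN additionally takes the local distribution density into account, so it generates more samples around minority points that are harder to learn.

Syntax:

from imblearn.over_sampling import SMOTE, ADASYN

Parameters(optional): *, sampling_strategy='auto', random_state=None, k_neighbors=5 (n_neighbors=5 for ADASYN), n_jobs=None

Implementation:

smote = SMOTE(sampling_strategy='minority')

X_smote, Y_smote = smote.fit_resample(X, Y)

Return Type: the resampled feature matrix of shape n_samples_new*n_features and the matching array of labels

Example: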

# import required modules

from sklearn.datasets import make_classification

from imblearn.over_sampling import SMOTE

# define dataset

x, y = make_classification(n_samples=10000, weights=[0.99], flip_y=0)

smote = SMOTE()

x_smote, y_smote = smote.fit_resample(x, y)

# print the features and the labels

print('x_smote:\n', x_smote)

print('y_smote:\n', y_smote)
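The interpolation step at the heart of SMOTE can be sketched in a few lines of standard-library Python. This is a simplified illustration under stated assumptions, not the library's implementation. `smote_like_sample` is a hypothetical helper that creates a single synthetic point and uses a small `k` so that it works on a toy dataset (requires Python 3.8+ for math.dist).

```python
import math
import random


def smote_like_sample(minority_rows, k=2, seed=0):
    """Create one synthetic point between a random minority sample and
    one of its k nearest minority neighbours (hypothetical helper)."""
    rng = random.Random(seed)
    base = rng.choice(minority_rows)
    # k nearest minority neighbours of the base point, excluding itself
    others = [row for row in minority_rows if row is not base]
    neighbours = sorted(others, key=lambda row: math.dist(base, row))[:k]
    neighbour = rng.choice(neighbours)
    gap = rng.random()  # position along the segment, in [0, 1)
    return [b + gap * (n - b) for b, n in zip(base, neighbour)]


# Toy minority class with four 2-D points
minority_rows = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [5.0, 5.0]]
new_point = smote_like_sample(minority_rows, k=2, seed=1)
print(new_point)
```

The synthetic point always lies on the line segment between two real minority samples, which is why SMOTE fills in the region occupied by the minority class rather than repeating existing rows.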


Undersampling

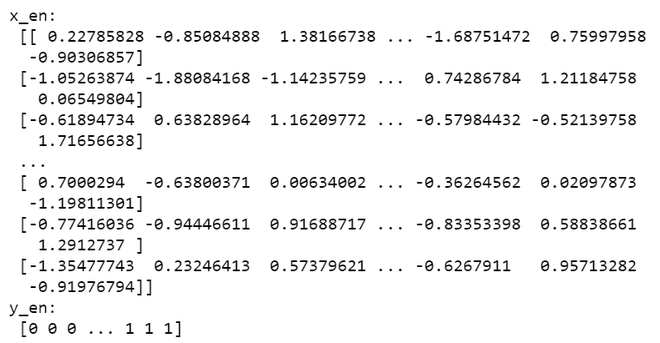

- Edited Nearest Neighbours: this algorithm removes any sample whose label differs from the class of its nearest neighbours.

Syntax:

from imblearn.under_sampling import EditedNearestNeighbours

Parameters(optional): sampling_strategy='auto', n_neighbors=3, kind_sel='all', n_jobs=None

Implementation:

en = EditedNearestNeighbours()

X_en, y_en = en.fit_resample(X, y)

Return Type: the resampled feature matrix of shape n_samples_new*n_features and the matching array of labels

Example:

# import required modules

from sklearn.datasets import make_classification

from imblearn.under_sampling import EditedNearestNeighbours

# define dataset

x, y = make_classification(n_samples=10000, weights=[0.99], flip_y=0)

en = EditedNearestNeighbours()

x_en, y_en = en.fit_resample(x, y)

# print the features and the labels

print('x_en:\n', x_en)

print('y_en:\n', y_en)
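The editing rule can be illustrated with a small from-scratch sketch. It rests on a few assumptions: only samples outside the smallest class are candidates for removal, a sample is dropped if any of its `k` nearest neighbours has a different label (in the spirit of kind_sel='all'), and distances are plain Euclidean. `edited_nearest_neighbours` is a hypothetical helper, not the library code (requires Python 3.8+ for math.dist).

```python
import math
from collections import Counter


def edited_nearest_neighbours(X, y, k=3):
    """Drop non-minority samples that have at least one of their k
    nearest neighbours in a different class (hypothetical helper)."""
    counts = Counter(y)
    minority = min(counts, key=counts.get)
    keep = []
    for i, (row, label) in enumerate(zip(X, y)):
        if label != minority:
            # Indices of the k nearest other samples
            nearest = sorted(
                (j for j in range(len(X)) if j != i),
                key=lambda j: math.dist(row, X[j]),
            )[:k]
            if any(y[j] != label for j in nearest):
                continue  # a neighbour disagrees: remove this sample
        keep.append(i)
    return [X[i] for i in keep], [y[i] for i in keep]


# Toy 1-D data: one class-0 point (10.0) sits inside the class-1 cluster
X = [[0.0], [1.0], [2.0], [3.0], [4.0], [10.0], [9.5], [10.5], [11.0]]
y = [0, 0, 0, 0, 0, 0, 1, 1, 1]

X_en, y_en = edited_nearest_neighbours(X, y, k=3)
print(X_en)
print(y_en)
```

Unlike random undersampling, this rule does not aim for a fixed class ratio; it only cleans up samples that sit in the neighbourhood of another class.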


- Random Under Sampler: it balances the classes by randomly selecting a subset of the samples of the targeted classes (by default, every class except the minority), with or without replacement.

Syntax:

from imblearn.under_sampling import RandomUnderSampler

Parameters(optional): sampling_strategy='auto', random_state=None, replacement=False

Implementation:

undersample = RandomUnderSampler()

X_under, y_under = undersample.fit_resample(X, y)

Return Type: the resampled feature matrix of shape n_samples_new*n_features and the matching array of labels

Example:

# import required modules

from sklearn.datasets import make_classification

from imblearn.under_sampling import RandomUnderSampler

# define dataset

x, y = make_classification(n_samples=10000,

weights=[0.99],

flip_y=0)

undersample = RandomUnderSampler()

x_under, y_under = undersample.fit_resample(x, y)

# print the features and the labels

print('x_under:\n', x_under)

print('y_under:\n', y_under)
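As a rough illustration of what random undersampling does, the sketch below trims every class down to the size of the smallest one using only the standard library. `random_undersample` is a hypothetical helper written for this example. Its `replacement` flag mirrors the idea of the sampler's parameter but is not the library's implementation.

```python
import random
from collections import Counter


def random_undersample(X, y, replacement=False, seed=0):
    """Randomly keep as many samples of each class as the smallest
    class contains (hypothetical helper)."""
    rng = random.Random(seed)
    counts = Counter(y)
    n_target = min(counts.values())
    keep = []
    for label in counts:
        idx = [i for i, lab in enumerate(y) if lab == label]
        if len(idx) > n_target:
            if replacement:
                # The same row may be picked more than once.
                idx = [rng.choice(idx) for _ in range(n_target)]
            else:
                idx = rng.sample(idx, n_target)
        keep.extend(idx)
    keep.sort()
    return [X[i] for i in keep], [y[i] for i in keep]


# Tiny hand-made dataset: 8 samples of class 0, 2 samples of class 1
X = [[i, i + 1] for i in range(10)]
y = [0] * 8 + [1] * 2

X_under, y_under = random_undersample(X, y)
print(Counter(y_under))
```

The trade-off is that the discarded majority rows are lost to the model, so this approach suits cases where the majority class is large enough to spare them.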
