根据多个条件删除 PySpark 数据框中的行

在本文中,我们将了解如何基于多个条件删除 PySpark 数据帧中的行。

方法一:使用逻辑表达式

这里我们将使用逻辑表达式来过滤行。 Filter()函数用于根据给定的条件或 SQL 表达式从 RDD/DataFrame 中过滤行。

Syntax: filter( condition)

Parameters:

- Condition: Logical condition or SQL expression

示例 1:

Python3

# importing module

import pyspark

# importing sparksession from pyspark.sql

# module

from pyspark.sql import SparkSession

# spark library import

import pyspark.sql.functions

# creating sparksession and giving an app name

spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# list of students data

data = [["1", "Amit", " DU"],

["2", "Mohit", "DU"],

["3", "rohith", "BHU"],

["4", "sridevi", "LPU"],

["1", "sravan", "KLMP"],

["5", "gnanesh", "IIT"]]

# specify column names

columns = ['student_ID', 'student_NAME', 'college']

# creating a dataframe from the lists of data

dataframe = spark.createDataFrame(data, columns)

dataframe = dataframe.filter(dataframe.college != "IIT")

dataframe.show()Python3

# importing module

import pyspark

# importing sparksession from pyspark.sql

# module

from pyspark.sql import SparkSession

# spark library import

import pyspark.sql.functions

# creating sparksession and giving an app name

spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# list of students data

data = [["1", "Amit", " DU"],

["2", "Mohit", "DU"],

["3", "rohith", "BHU"],

["4", "sridevi", "LPU"],

["1", "sravan", "KLMP"],

["5", "gnanesh", "IIT"]]

# specify column names

columns = ['student_ID', 'student_NAME', 'college']

# creating a dataframe from the lists of data

dataframe = spark.createDataFrame(data, columns)

dataframe = dataframe.filter(

((dataframe.college != "DU")

& (dataframe.student_ID != "3"))

)

dataframe.show()Python3

# importing module

import pyspark

# importing sparksession from pyspark.sql

# module

from pyspark.sql import SparkSession

# spark library import

import pyspark.sql.functions

# spark library import

from pyspark.sql.functions import when

# creating sparksession and giving an app name

spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# list of students data

data = [["1", "Amit", " DU"],

["2", "Mohit", "DU"],

["3", "rohith", "BHU"],

["4", "sridevi", "LPU"],

["1", "sravan", "KLMP"],

["5", "gnanesh", "IIT"]]

# specify column names

columns = ['student_ID', 'student_NAME', 'college']

# creating a dataframe from the lists of data

dataframe = spark.createDataFrame(data, columns)

dataframe.withColumn('New_col',

when(dataframe.student_ID != '5', "True")

.when(dataframe.student_NAME != 'gnanesh', "True")

).filter("New_col == True").drop("New_col").show()输出:

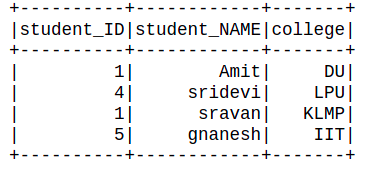

示例 2:

蟒蛇3

# importing module

import pyspark

# importing sparksession from pyspark.sql

# module

from pyspark.sql import SparkSession

# spark library import

import pyspark.sql.functions

# creating sparksession and giving an app name

spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# list of students data

data = [["1", "Amit", " DU"],

["2", "Mohit", "DU"],

["3", "rohith", "BHU"],

["4", "sridevi", "LPU"],

["1", "sravan", "KLMP"],

["5", "gnanesh", "IIT"]]

# specify column names

columns = ['student_ID', 'student_NAME', 'college']

# creating a dataframe from the lists of data

dataframe = spark.createDataFrame(data, columns)

dataframe = dataframe.filter(

((dataframe.college != "DU")

& (dataframe.student_ID != "3"))

)

dataframe.show()

输出:

方法二:使用when()方法

它评估条件列表并返回单个值。因此,传递条件及其所需的值将完成工作。

Syntax: When( Condition, Value)

Parameters:

- Condition: Boolean or columns expression.

- Value: Literal Value

例子:

蟒蛇3

# importing module

import pyspark

# importing sparksession from pyspark.sql

# module

from pyspark.sql import SparkSession

# spark library import

import pyspark.sql.functions

# spark library import

from pyspark.sql.functions import when

# creating sparksession and giving an app name

spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# list of students data

data = [["1", "Amit", " DU"],

["2", "Mohit", "DU"],

["3", "rohith", "BHU"],

["4", "sridevi", "LPU"],

["1", "sravan", "KLMP"],

["5", "gnanesh", "IIT"]]

# specify column names

columns = ['student_ID', 'student_NAME', 'college']

# creating a dataframe from the lists of data

dataframe = spark.createDataFrame(data, columns)

dataframe.withColumn('New_col',

when(dataframe.student_ID != '5', "True")

.when(dataframe.student_NAME != 'gnanesh', "True")

).filter("New_col == True").drop("New_col").show()

输出: