XGBoost in R Programming

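XGBoost is a fast and efficient algorithm that has been used by the winners of many machine learning competitions. XGBoost works only with numeric variables, so categorical variables must be encoded as numbers before training.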
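Because XGBoost accepts only numeric input, each categorical column has to be turned into numbers first. The sketch below does one-hot encoding in base R with model.matrix(). The toy data frame is made up for illustration. The script later in this article does the same job with caret::dummyVars(), so model.matrix() here is a swapped-in alternative, not the article's method.

```r
# Toy data frame (made-up values): one categorical and one numeric column
df <- data.frame(
  Outlet_Type = factor(c("Grocery", "Supermarket", "Grocery")),
  Item_MRP    = c(10, 20, 30)
)

# One-hot encode: "- 1" drops the intercept, so every level of the first
# factor gets its own 0/1 column; numeric columns pass through unchanged
X <- model.matrix(~ . - 1, data = df)
print(X)
```

A purely numeric matrix like X is the kind of input that xgboost's xgb.DMatrix() expects for its data argument.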

XGBoost in R

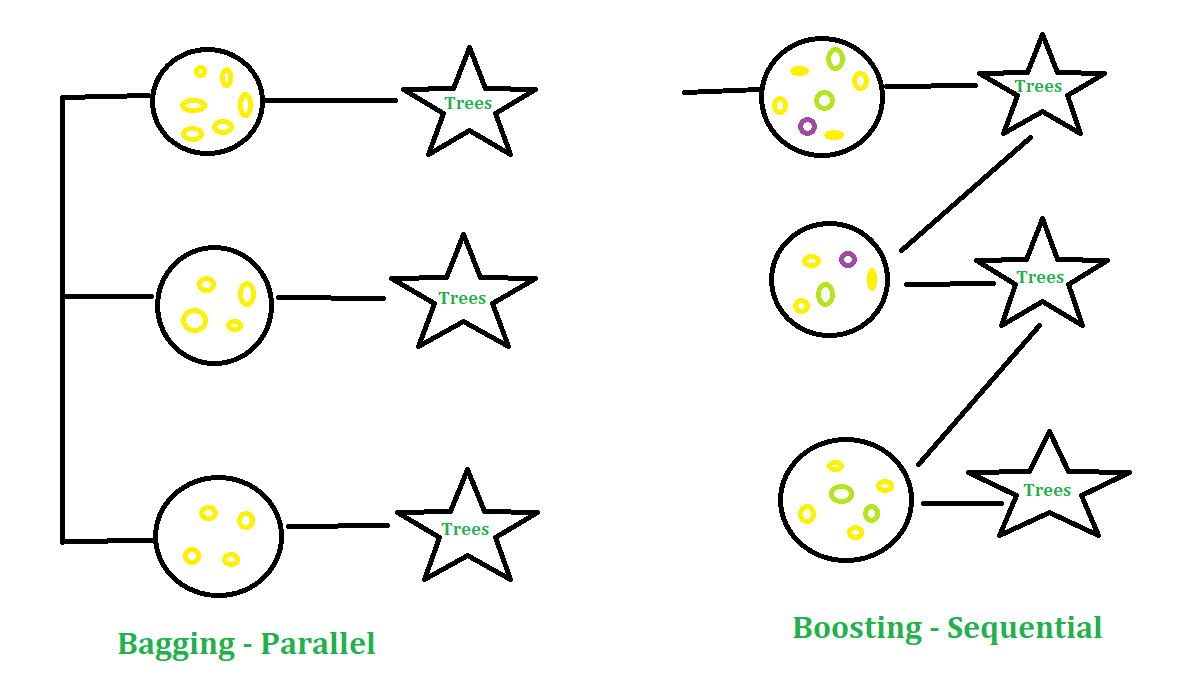

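XGBoost belongs to the family of boosting techniques, in which samples are selected more intelligently so that observations are classified better. XGBoost has interfaces for C++, R, Python, Julia, Java and Scala. Its core functions are implemented in C++, so models can easily be shared between the different interfaces. According to CRAN mirror statistics, the package has been downloaded more than 81,000 times. XGBoost modeling is easiest to understand by contrasting two ensemble techniques, bagging and boosting. XGBoost itself is a gradient boosting method, and its row and column subsampling borrows the random-sampling idea of bagging.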

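- Bagging: random samples of the data are drawn with replacement, a learning algorithm is built on each sample, and their outputs are combined with a simple average (for example, of predicted probabilities) to give the bagged prediction.

- Boosting: samples are selected more intelligently, with progressively more weight given to observations that are hard to classify. In gradient boosting, which XGBoost implements, each new tree is fitted to the errors (loss gradients) of the current ensemble.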
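The difference between the two techniques can be sketched in a few lines of R. This is a toy illustration on simulated data. It assumes the rpart package, which ships with standard R installations, for the base trees. The boosting part is plain gradient boosting with squared loss and a shrinkage factor eta, not XGBoost's own regularized algorithm. The function names are hypothetical.

```r
# Toy comparison of bagging and boosting with regression trees (simulated data).
library(rpart)

set.seed(1)
n  <- 200
df <- data.frame(x = runif(n, 0, 10))
df$y <- sin(df$x) + rnorm(n, sd = 0.3)

# Bagging: grow each tree on a bootstrap sample (rows drawn with replacement)
# and average the predictions of all trees.
bagging_predict <- function(df, n_trees = 50) {
  preds <- sapply(seq_len(n_trees), function(b) {
    idx <- sample(nrow(df), replace = TRUE)
    fit <- rpart(y ~ x, data = df[idx, ])
    predict(fit, newdata = df)
  })
  rowMeans(preds)
}

# Boosting (gradient boosting, squared loss): each shallow tree is fitted to the
# residuals of the current ensemble, and its output is shrunk by eta before
# being added, which is the role eta plays in XGBoost.
boosting_predict <- function(df, n_trees = 100, eta = 0.1) {
  pred <- rep(mean(df$y), nrow(df))          # start from the mean
  for (m in seq_len(n_trees)) {
    df$r <- df$y - pred                      # residuals = negative gradient
    fit  <- rpart(r ~ x, data = df, control = rpart.control(maxdepth = 2))
    pred <- pred + eta * predict(fit, newdata = df)
  }
  pred
}

rmse <- function(actual, predicted) sqrt(mean((actual - predicted)^2))
bag_pred   <- bagging_predict(df)
boost_pred <- boosting_predict(df)
cat("bagging  training RMSE:", rmse(df$y, bag_pred), "\n")
cat("boosting training RMSE:", rmse(df$y, boost_pred), "\n")
```

Bagging trains its trees independently and reduces variance by averaging. Boosting trains them sequentially, so each tree depends on the mistakes of the ones before it.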

Parameters in XGBoost

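- eta: shrinks the weights learned at each boosting step to make the boosting process more conservative. It ranges from 0 to 1 and is also called the learning rate or shrinkage factor. A lower eta makes the model more robust to overfitting, but more boosting rounds are then needed.

- gamma: the minimum loss reduction required to make a further split on a leaf node. The larger gamma is, the more conservative the algorithm. It ranges from 0 to infinity.

- max_depth: the maximum depth of a tree. Deeper trees can model more complex interactions but overfit more easily.

- subsample: the fraction of rows that the model randomly samples to grow each tree.

- colsample_bytree: the fraction of variables (columns) randomly sampled when building each tree in the model.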
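The effect of gamma can be made concrete with the split-gain formula from XGBoost's "Introduction to Boosted Trees" tutorial. In that formula, G and H are the sums of the first- and second-order gradients in the left (L) and right (R) child, and lambda is the L2 regularization term (XGBoost's default is 1). A split is only made if its gain is positive, so gamma acts as the minimum loss reduction. The helper below is a hypothetical illustration for squared-error loss, not code from the xgboost package, whose internal scaling of the gain may differ.

```r
# Hypothetical helper: split gain for squared-error loss, following
#   gain = 1/2 * [GL^2/(HL+lambda) + GR^2/(HR+lambda) - (GL+GR)^2/(HL+HR+lambda)] - gamma
# For squared error, each row has gradient g = prediction - y and Hessian h = 1.
split_gain <- function(y, pred, go_left, lambda = 1, gamma = 0) {
  g <- pred - y
  h <- rep(1, length(y))
  score <- function(G, H) G^2 / (H + lambda)
  GL <- sum(g[go_left]);  HL <- sum(h[go_left])
  GR <- sum(g[!go_left]); HR <- sum(h[!go_left])
  0.5 * (score(GL, HL) + score(GR, HR) - score(GL + GR, HL + HR)) - gamma
}

y       <- c(1, 2, 10, 11)              # toy targets
pred    <- rep(0.5, 4)                  # current predictions
go_left <- c(TRUE, TRUE, FALSE, FALSE)  # candidate split

split_gain(y, pred, go_left, gamma = 0)   # 18.93333: positive, split is kept
split_gain(y, pred, go_left, gamma = 50)  # -31.06667: negative, split is rejected
```

The same split that is worthwhile with gamma = 0 is rejected with a large gamma, which is why a larger gamma makes the algorithm more conservative.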

Dataset

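The Big Mart dataset contains 1559 products across 10 stores in different cities, with certain attributes defined for each product and store. It consists of 12 features:

- Item_Identifier: the unique product ID assigned to each distinct item.

- Item_Weight: the weight of the product.

- Item_Fat_Content: whether the product is low fat or not.

- Item_Visibility: the percentage of the total display area of all products in a store that is allocated to this particular product.

- Item_Type: the food category to which the item belongs.

- Item_MRP: the maximum retail price (list price) of the product.

- Outlet_Identifier: the unique store ID, an alphanumeric string of length 6.

- Outlet_Establishment_Year: the year in which the store was established.

- Outlet_Size: the size of the store in terms of ground area covered.

- Outlet_Location_Type: the size (tier) of the city in which the store is located.

- Outlet_Type: whether the outlet is a grocery store or some sort of supermarket.

- Item_Outlet_Sales: the sales of the product in the particular store (the target variable).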

R

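# Loading data (fread() comes from data.table; install it first if needed)
library(data.table)
train = fread("Train_UWu5bXk.csv")
test = fread("Test_u94Q5KV.csv")

# Structure of the training data
str(train)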


Performing XGBoost on the dataset

Here the XGBoost algorithm is applied to the Big Mart dataset, which has 12 features and covers 1559 products across 10 stores in different cities.

R

# Installing packages (only needed once)
install.packages("data.table")
install.packages("dplyr")
install.packages("ggplot2")
install.packages("caret")
install.packages("xgboost")
install.packages("e1071")
install.packages("cowplot")

# Loading packages
library(data.table) # for reading and manipulation of data
library(dplyr)      # for data manipulation and joining
library(ggplot2)    # for plotting
library(caret)      # for modeling (dummyVars, preProcess)
library(xgboost)    # for building the XGBoost model
library(e1071)      # for skewness()
library(cowplot)    # for combining multiple plots

# Combine train and test so that the same preprocessing is applied to both;
# the test set has no target, so add Item_Outlet_Sales filled with NA
test[, Item_Outlet_Sales := NA]
combi = rbind(train, test)

# Missing value treatment: fill a missing Item_Weight with the
# mean weight of the same item (Item_Identifier)
missing_index = which(is.na(combi$Item_Weight))
for (i in missing_index) {
  item = combi$Item_Identifier[i]
  combi$Item_Weight[i] = mean(combi$Item_Weight[combi$Item_Identifier == item],
                              na.rm = TRUE)
}

# Replace 0 in Item_Visibility with the mean visibility of the same item
zero_index = which(combi$Item_Visibility == 0)
for (i in zero_index) {
  item = combi$Item_Identifier[i]
  combi$Item_Visibility[i] = mean(combi$Item_Visibility[combi$Item_Identifier == item],
                                  na.rm = TRUE)
}

# Label encoding: convert the ordinal categorical variables to numeric codes
combi[, Outlet_Size_num :=
        ifelse(Outlet_Size == "Small", 0,
               ifelse(Outlet_Size == "Medium", 1, 2))]
combi[, Outlet_Location_Type_num :=
        ifelse(Outlet_Location_Type == "Tier 3", 0,
               ifelse(Outlet_Location_Type == "Tier 2", 1, 2))]
combi[, c("Outlet_Size", "Outlet_Location_Type") := NULL]

# One-hot encoding: convert the remaining categorical variables to 0/1 dummy columns
ohe_1 = dummyVars("~.",
                  data = combi[, -c("Item_Identifier", "Outlet_Establishment_Year", "Item_Type")],
                  fullRank = TRUE)
ohe_df = data.table(predict(ohe_1,
                            combi[, -c("Item_Identifier", "Outlet_Establishment_Year", "Item_Type")]))
combi = cbind(combi[, "Item_Identifier"], ohe_df)

# Remove skewness: check the skewness of Item_Visibility, then apply a log transform
skewness(combi$Item_Visibility)

# log(x + 1) instead of log(x), because log(0) is -Inf
combi[, Item_Visibility := log(Item_Visibility + 1)]

# Scaling and centering: standardize every numeric predictor
# (all numeric columns except the target Item_Outlet_Sales)
num_vars = which(sapply(combi, is.numeric))
num_vars_names = names(num_vars)
combi_numeric = combi[, setdiff(num_vars_names, "Item_Outlet_Sales"), with = FALSE]
prep_num = preProcess(combi_numeric, method = c("center", "scale"))
combi_numeric_norm = predict(prep_num, combi_numeric)

# Replace the original numeric predictors with their standardized versions
combi[, setdiff(num_vars_names, "Item_Outlet_Sales") := NULL]
combi = cbind(combi, combi_numeric_norm)

# Split the combined data back into train and test
train = combi[1:nrow(train)]
test = combi[(nrow(train) + 1):nrow(combi)]

# The test set has no target, so drop Item_Outlet_Sales
test[, Item_Outlet_Sales := NULL]

# Model building: XGBoost hyperparameters
param_list = list(
  objective = "reg:squarederror",  # squared-error regression (formerly "reg:linear")
  eta = 0.01,
  gamma = 1,
  max_depth = 6,
  subsample = 0.8,
  colsample_bytree = 0.5
)

# Convert train and test into xgb.DMatrix format (predictors only, no ID column)
Dtrain = xgb.DMatrix(
  data = as.matrix(train[, -c("Item_Identifier", "Item_Outlet_Sales")]),
  label = train$Item_Outlet_Sales)
Dtest = xgb.DMatrix(
  data = as.matrix(test[, -c("Item_Identifier")]))

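# 5-fold cross-validation to find the optimal number of boosting rounds (nrounds);
# training stops once the test RMSE has not improved for 30 rounds
set.seed(112)  # for reproducible folds and subsampling
xgbcv = xgb.cv(params = param_list,
               data = Dtrain,
               nrounds = 1000,
               nfold = 5,
               print_every_n = 10,
               early_stopping_rounds = 30,
               maximize = FALSE)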

# Train the final model with the best nrounds found by cross-validation (428 in this run)
xgb_model = xgb.train(data = Dtrain,
                      params = param_list,
                      nrounds = 428)
xgb_model

# Variable importance
var_imp = xgb.importance(
  feature_names = setdiff(names(train), c("Item_Identifier", "Item_Outlet_Sales")),
  model = xgb_model)

# Importance plot
xgb.plot.importance(var_imp)

Output:

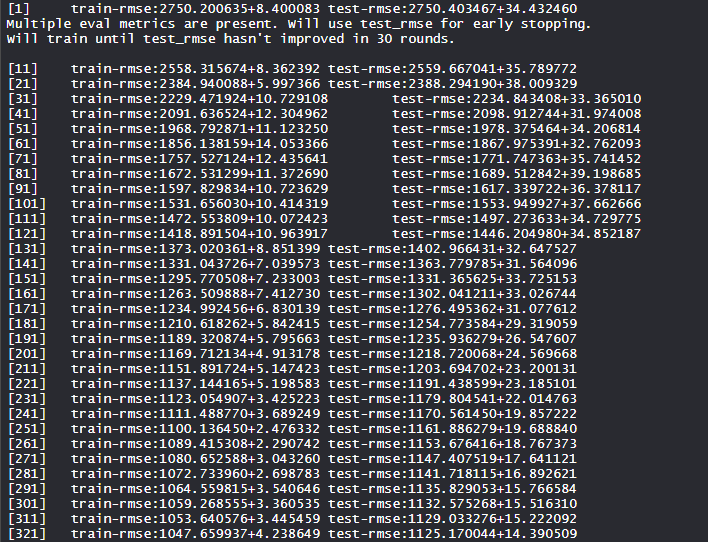

- Training the XGBoost model:

During cross-validation, xgb.cv() computes the train-rmse and test-rmse at every round and identifies the round with the lowest test-rmse.

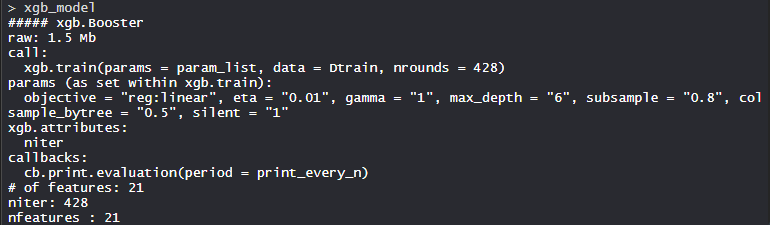
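This is how xgb.cv() chooses the number of rounds. With early_stopping_rounds = 30, it keeps track of the round with the lowest mean test RMSE and stops once 30 rounds pass without improvement. Below is a base-R sketch of that rule. The function name and the RMSE values are made up for illustration and are not output from the Big Mart model.

```r
# Hypothetical helper illustrating the early-stopping rule of
# xgb.cv(early_stopping_rounds = patience): remember the round with the
# lowest test RMSE and stop once `patience` rounds pass without improvement.
find_best_round <- function(test_rmse, patience) {
  best_iter  <- 1
  best_score <- test_rmse[1]
  stopped_at <- length(test_rmse)   # ran to the end unless stopped early
  for (i in seq_along(test_rmse)) {
    if (test_rmse[i] < best_score) {
      best_score <- test_rmse[i]
      best_iter  <- i
    } else if (i - best_iter >= patience) {
      stopped_at <- i
      break
    }
  }
  list(best_iteration = best_iter, best_score = best_score,
       stopped_at = stopped_at)
}

# Made-up test-RMSE curve: improves until round 5, then gets worse
toy_rmse <- c(10, 8, 7, 6.5, 6.4, 6.45, 6.5, 6.6, 6.7, 6.8)
res <- find_best_round(toy_rmse, patience = 3)
res$best_iteration  # 5
res$stopped_at      # 8
```

In the script, the best round reported by xgb.cv() (428 in the original run) is then passed as nrounds to xgb.train().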

- Model xgb_model:

The XGBoost model uses 21 features with a linear-regression (squared-error) objective, eta = 0.01, gamma = 1, max_depth = 6, subsample = 0.8, colsample_bytree = 0.5 and silent = 1.

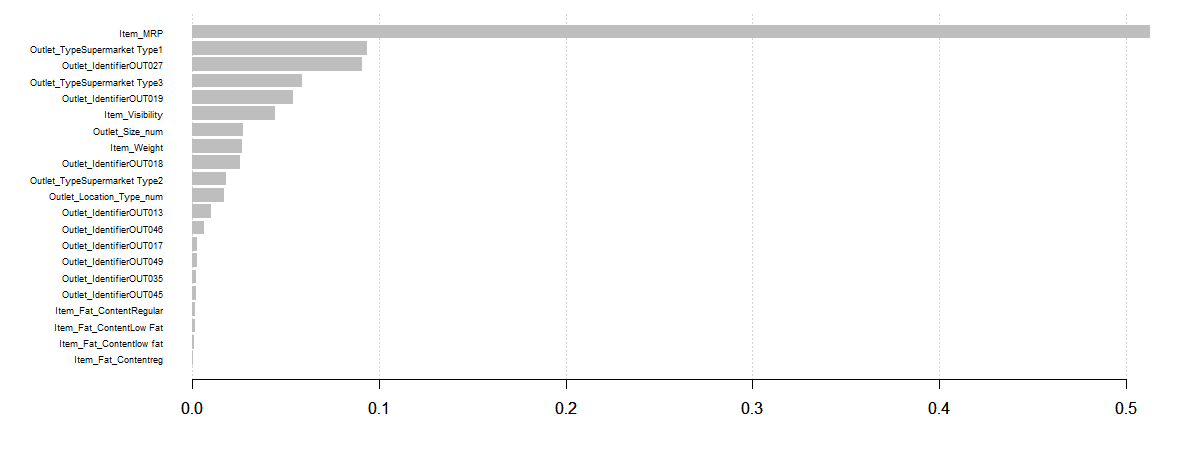

- Variable importance plot:

Item_MRP is the most important variable, followed by Item_Visibility and Outlet_Location_Type_num.
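Among other columns, xgb.importance() reports a Gain value for each feature. Gain is based on the total gain of all splits that use the feature, normalized so that the values sum to 1. The following is a minimal sketch of that aggregation. The per-split gains are hypothetical, not values taken from xgb_model.

```r
# Hypothetical gains of individual splits, for illustration only
splits <- data.frame(
  feature = c("Item_MRP", "Item_MRP", "Item_Visibility", "Outlet_Location_Type_num"),
  gain    = c(120, 80, 30, 20)
)

# Total gain per feature, normalised so that the values sum to 1
total_gain <- vapply(split(splits$gain, splits$feature), sum, numeric(1))
importance <- sort(total_gain / sum(total_gain), decreasing = TRUE)
print(importance)
# Item_MRP 0.80, Item_Visibility 0.12, Outlet_Location_Type_num 0.08
```

A feature ranks high either because it is used in many splits or because its splits reduce the loss a lot.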

XGBoost has applications in many industrial domains and is widely used in practice.