ML | Cost Function in Logistic Regression

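In the case of linear regression, the cost function is:

$$J(\theta) = \frac{1}{m} \sum_{i=1}^{m} \frac{1}{2}\left[h_\theta(x^{(i)}) - y^{(i)}\right]^2$$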

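But for logistic regression, the hypothesis is

$$h_\theta(x) = g(\theta^T x) = \frac{1}{1 + e^{-\theta^T x}}$$

Plugging this sigmoid hypothesis into the linear regression cost produces a non-convex cost function. A non-convex cost function can have many local optima, which is a serious problem for gradient descent: it may converge to a local optimum instead of the global optimum.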
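A quick numerical check can make the non-convexity concrete. The sketch below is an illustration under assumptions of our own, not part of the original derivation: it uses a single hypothetical training example with $x = 1$, $y = 1$ and one parameter $\theta$, and estimates second derivatives with central finite differences. It compares the squared-error cost $\frac{1}{2}(g(\theta) - 1)^2$ with the $-\log g(\theta)$ cost that logistic regression actually uses.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic function g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical single training example (x = 1, y = 1), so h_theta(x) = g(theta).
X_EXAMPLE, Y_EXAMPLE = 1.0, 1.0

def squared_error_cost(theta: float) -> float:
    """Linear-regression-style cost (1/2)(h - y)^2 with the sigmoid hypothesis."""
    h = sigmoid(theta * X_EXAMPLE)
    return 0.5 * (h - Y_EXAMPLE) ** 2

def log_cost(theta: float) -> float:
    """Logistic regression cost -log(h) for the same example (y = 1)."""
    return -math.log(sigmoid(theta * X_EXAMPLE))

def second_derivative(f, t: float, step: float = 1e-3) -> float:
    """Central finite-difference estimate of f''(t)."""
    return (f(t + step) - 2.0 * f(t) + f(t - step)) / step ** 2

for t in (-3.0, 0.0, 3.0):
    se = second_derivative(squared_error_cost, t)
    lc = second_derivative(log_cost, t)
    print(f"theta={t:+.1f}  squared-error f''={se:+.4f}  log-cost f''={lc:+.4f}")
```

For this example the squared-error second derivative works out analytically to $s^2(1-s)(2-3s)$ with $s = g(-\theta)$, which is negative whenever $\theta < -\ln 2$, so that curve bends the wrong way and is not convex. The $-\log$ cost has second derivative $g(\theta)(1 - g(\theta)) > 0$ everywhere.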

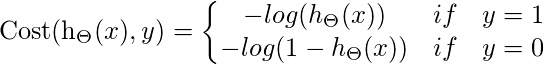

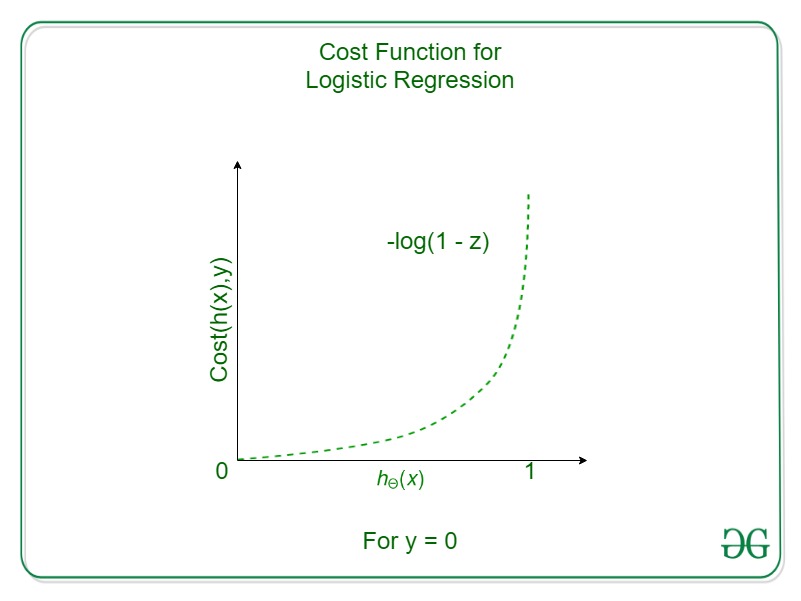

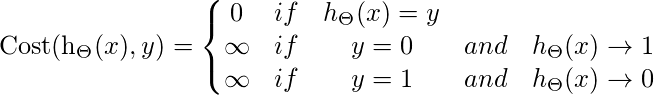

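So, for logistic regression, the cost function is:

$$\text{Cost}(h_\theta(x), y) = \begin{cases} -\log(h_\theta(x)) & \text{if } y = 1 \\ -\log(1 - h_\theta(x)) & \text{if } y = 0 \end{cases}$$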
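The piecewise logistic regression cost, $-\log(h_\theta(x))$ when $y = 1$ and $-\log(1 - h_\theta(x))$ when $y = 0$, translates directly into code. This is a minimal sketch, assuming `h` is the model's predicted probability $h_\theta(x)$ strictly between 0 and 1 and `y` is a 0/1 label; the function name `cost` is our own.

```python
import math

def cost(h: float, y: int) -> float:
    """Per-example logistic regression cost Cost(h_theta(x), y).

    h: predicted probability h_theta(x), assumed to lie strictly in (0, 1).
    y: true label, 0 or 1.
    """
    if y == 1:
        return -math.log(h)        # near 0 when h -> 1, grows without bound as h -> 0
    if y == 0:
        return -math.log(1.0 - h)  # near 0 when h -> 0, grows without bound as h -> 1
    raise ValueError("y must be 0 or 1")

print(cost(0.99, 1))  # confident and correct: about 0.01
print(cost(0.01, 1))  # confident and wrong: about 4.61
```

The two branches are mirror images: `cost(h, 0)` equals `cost(1 - h, 1)`.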

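If $y = 1$:

Cost $= 0$ when $h_\theta(x) = 1$, but as $h_\theta(x) \to 0$, Cost $\to \infty$. A confident prediction that turns out to be wrong is therefore penalized very heavily.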

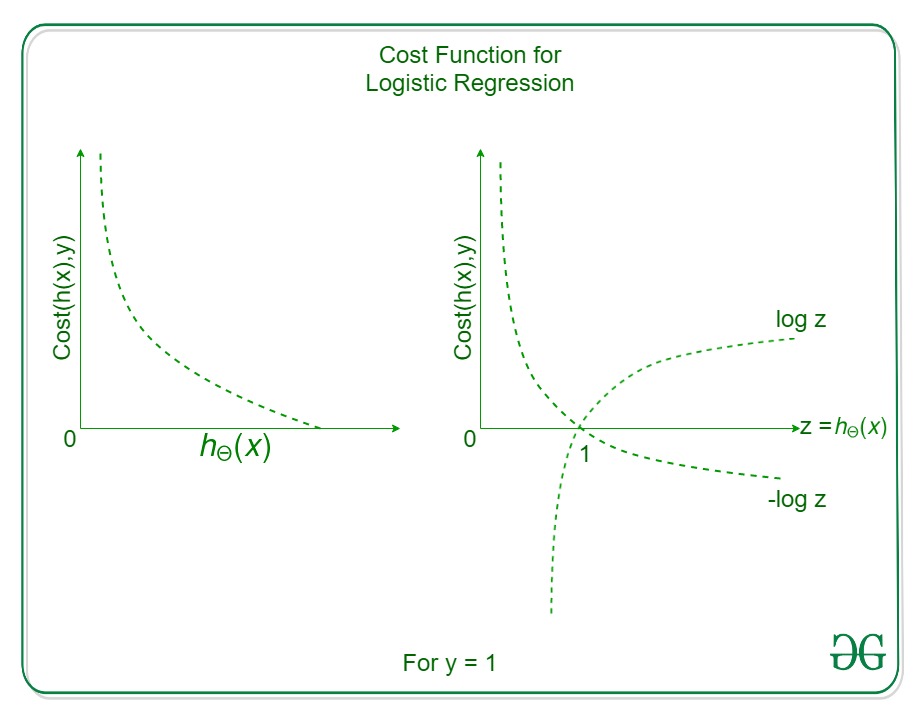

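If $y = 0$:

Cost $= 0$ when $h_\theta(x) = 0$, but as $h_\theta(x) \to 1$, Cost $\to \infty$, mirroring the $y = 1$ case.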

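So, the two cases can be combined into a single cost function over all $m$ training examples:

$$J(\theta) = -\frac{1}{m} \sum_{i=1}^{m} \left[ y^{(i)} \log\left(h_\theta(x^{(i)})\right) + \left(1 - y^{(i)}\right) \log\left(1 - h_\theta(x^{(i)})\right) \right]$$

Unlike the squared-error version, this $J(\theta)$ is convex in $\theta$, so gradient descent does not get trapped in local optima.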
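The combined cost $J(\theta)$ can be evaluated over a dataset as below. This is a plain-Python sketch under our own assumptions: each row of `X` already includes a leading 1 for the intercept term, `theta` is a list of the same length, and the toy data is hypothetical. At $\theta = 0$ every prediction is $0.5$, so $J(\theta) = \log 2$ regardless of the labels, which makes a handy sanity check.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic function g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def hypothesis(theta, x):
    """h_theta(x) = g(theta^T x); x includes a leading 1 for the intercept."""
    return sigmoid(sum(t * xj for t, xj in zip(theta, x)))

def cost_J(theta, X, y):
    """J(theta) = -(1/m) * sum_i [y_i log h_i + (1 - y_i) log(1 - h_i)]."""
    total = 0.0
    for x_i, y_i in zip(X, y):
        h = hypothesis(theta, x_i)
        total += y_i * math.log(h) + (1 - y_i) * math.log(1.0 - h)
    return -total / len(y)

# Hypothetical toy data: intercept column plus one feature.
X = [[1.0, -1.5], [1.0, -0.5], [1.0, 0.5], [1.0, 1.5]]
y = [0, 0, 1, 1]

print(cost_J([0.0, 0.0], X, y))  # log 2, about 0.693
print(cost_J([0.0, 2.0], X, y))  # lower: this theta separates the two classes
```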

To fit the parameters $\theta$, $J(\theta)$ must be minimized, and gradient descent is used to do so.

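Gradient descent looks similar to that of linear regression; the difference lies in the hypothesis $h_\theta(x)$, which is now the sigmoid $g(\theta^T x)$ instead of $\theta^T x$. Repeat, updating all $\theta_j$ simultaneously:

$$\theta_j := \theta_j - \alpha \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)}$$

where $\alpha$ is the learning rate.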
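The gradient descent update can be sketched as batch gradient descent in plain Python. This is an illustrative sketch, not the article's implementation: the learning rate `alpha=0.1`, the `iterations=1000` count, the zero initialization, and the four-point toy dataset are all assumptions chosen for the example. Apart from `hypothesis` using the sigmoid, the loop is the same one you would write for linear regression.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic function g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def hypothesis(theta, x):
    """h_theta(x) = g(theta^T x); x includes a leading 1 for the intercept."""
    return sigmoid(sum(t * xj for t, xj in zip(theta, x)))

def cost_J(theta, X, y):
    """Logistic regression cost J(theta), averaged over the m examples."""
    total = 0.0
    for x_i, y_i in zip(X, y):
        h = hypothesis(theta, x_i)
        total += y_i * math.log(h) + (1 - y_i) * math.log(1.0 - h)
    return -total / len(y)

def gradient_descent(X, y, alpha=0.1, iterations=1000):
    """Batch gradient descent with the update
    theta_j := theta_j - alpha * (1/m) * sum_i (h_theta(x_i) - y_i) * x_ij."""
    m, n = len(X), len(X[0])
    theta = [0.0] * n
    for _ in range(iterations):
        errors = [hypothesis(theta, x_i) - y_i for x_i, y_i in zip(X, y)]
        # Compute every gradient component first so all theta_j update simultaneously.
        grad = [sum(e * x_i[j] for e, x_i in zip(errors, X)) / m for j in range(n)]
        theta = [t - alpha * g for t, g in zip(theta, grad)]
    return theta

# Hypothetical toy data: intercept column plus one feature.
X = [[1.0, -1.5], [1.0, -0.5], [1.0, 0.5], [1.0, 1.5]]
y = [0, 0, 1, 1]

theta = gradient_descent(X, y)
print("theta:", theta)
print("J before:", cost_J([0.0, 0.0], X, y), "J after:", cost_J(theta, X, y))
```

On this symmetric toy data the intercept stays (numerically) at zero and the slope becomes positive, so the learned model predicts $h_\theta(x) \ge 0.5$ exactly for the two examples labeled 1.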